The feedback from all this data is channeled to the brain of the hardware system, an AI neural network that produces steering and acceleration commands. What that looks like has been re-created in a recent YouTube video by Tesla white-hat hacker verygreen and a collaborator known as DamianXVI.

ALL BUT A SENSE OF SMELL

The new Autopilot 9.0 system in Tesla’s vehicles is designed to be almost omniscient regarding its surroundings. Using sight, sound, radar, and inertia-sensing units, it rapidly picks up objects, figures out what they are, and quantifies them in time and space: where they are, how fast they are going, and in what direction. It then sends all that data to a computer that makes the driving decisions. Before we see just how complicated that process can be in a moving vehicle, let’s look at the basic hardware in the system.

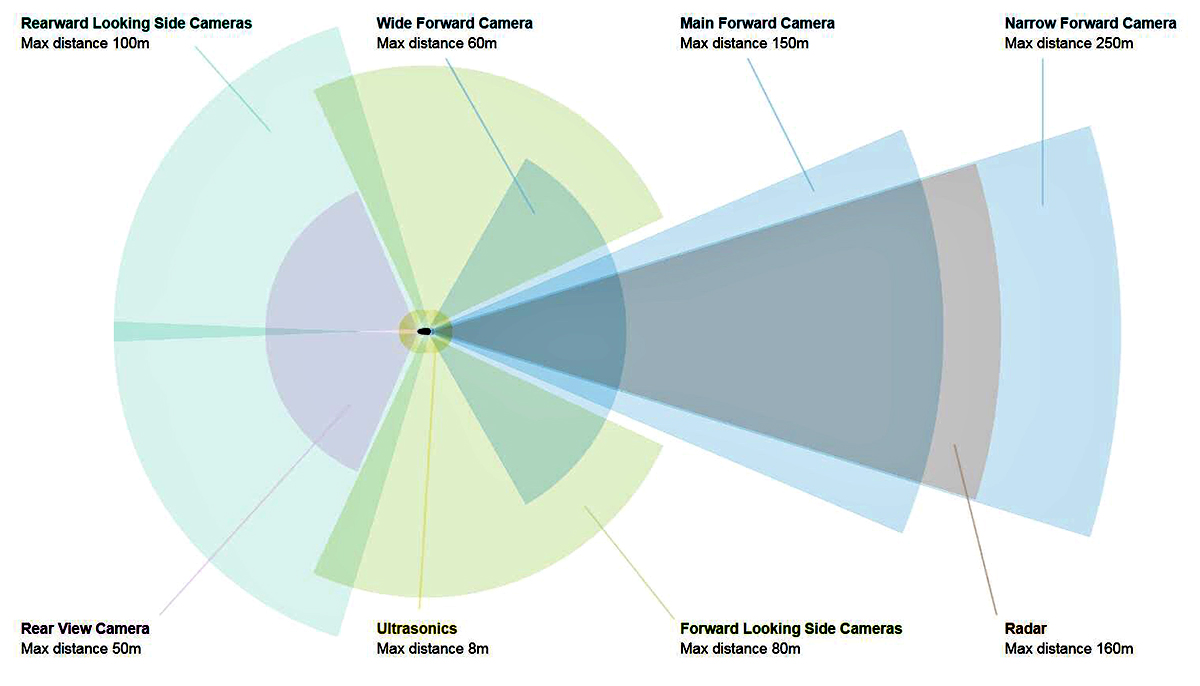

Coverage provided by the on-board sensors. Image: Wikimedia Commons

Eight cameras provide 360-degree coverage. Three forward-facing cameras are mounted in cutouts on the back of the rear-view mirror. The main forward camera has a maximum range of 150m (492 ft.) with a 50-degree field of view. The narrow forward camera looks ahead 250m (820 ft.) with a 35-degree field of view. The third forward camera provides a wide-angle, 150-degree view while looking ahead 60m (197 ft.).
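To make the trade-off between range and field of view concrete, here is a minimal sketch that treats each forward camera as a simple cone, using only the figures quoted above. The `Camera` class, the `cameras_covering` function, and the cone model itself are illustrative assumptions, not anything taken from Tesla’s software.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Camera:
    name: str        # hypothetical label for this sketch
    range_m: float   # maximum distance quoted in the article
    fov_deg: float   # full field of view quoted in the article


# Figures for the three forward-facing cameras, as given in the text.
FORWARD_CAMERAS = [
    Camera("main", 150, 50),
    Camera("narrow", 250, 35),
    Camera("wide", 60, 150),
]


def cameras_covering(distance_m: float, bearing_deg: float) -> list[str]:
    """Return the cameras whose idealized cone contains the target.

    bearing_deg is the angle off the car's centerline (0 = straight ahead).
    Assumption: a camera "sees" the target if it is within range and
    within half the field of view on either side of the centerline.
    """
    return [
        cam.name
        for cam in FORWARD_CAMERAS
        if distance_m <= cam.range_m and abs(bearing_deg) <= cam.fov_deg / 2
    ]


print(cameras_covering(200, 0))   # far and straight ahead
print(cameras_covering(50, 60))   # close but well off to the side
```

Under this simplified model, an object 200m straight ahead falls only inside the narrow camera’s cone, while one 50m away and 60 degrees off-center is covered only by the wide-angle camera.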

On the outside, there are two side-mounted cameras on each side of the car and one on the rear deck. The side mounts are hidden in the Tesla medallions on the front fenders and in the pillars between the front and rear windows. These exterior cameras have heaters to prevent them from being blinded by snow or ice. The ultrasonic sensors have a range of 8m (26 ft.).

Unlike a number of its competitors, Tesla has decided to use radar rather than LIDAR, and its sensor, with a range of 160m (525 ft.), is in the rear-view mirror with the front-facing cameras. A LIDAR (light detection and ranging) system measures the distance to an object by sending out pulses of laser light and timing the reflections that come back. Radar works the same way but uses pulses of high-frequency electromagnetic (radio) waves instead of light.
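Both approaches rest on the same time-of-flight arithmetic: a pulse travels to the object and back at the speed of light, so the distance is half the round trip. The sketch below shows that calculation only; the function names are hypothetical, and it ignores everything a real sensor must handle, such as noise, multiple echoes, and Doppler processing.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # laser and radio pulses both travel at c


def distance_from_echo(round_trip_s: float) -> float:
    """Distance to the object, given the pulse's round-trip time in seconds."""
    # The pulse covers the distance twice (out and back), hence the division by 2.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2


def echo_time_for(distance_m: float) -> float:
    """Round-trip time in seconds for an object at the given distance."""
    return 2 * distance_m / SPEED_OF_LIGHT_M_PER_S


# Round-trip time for an object at the radar's quoted 160m range.
print(echo_time_for(160))
```

At the 160m range quoted for the radar, the echo returns in roughly one microsecond, which gives a sense of the timing precision these sensors need.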

A VISUAL TOUR

In comments about their YouTube re-creation, verygreen and DamianXVI offer several caveats. It isn’t from Tesla, and no one from Tesla assisted in its making. A dedicated follower of Tesla who posts on Reddit as greentheonly, verygreen got his big break when he bought an Autopilot Hardware 2.5 computer on eBay that turned out to be a fully unlocked developer version of the hardware for the Autopilot system. He explained on the Tesla subreddit thread, “So keep in mind our visualizations are not what Tesla devs see out of their car footage, and we do not fully understand all the values either (though we have decent visibility into the system now as you can see).”

The video, built from their research and the Autopilot computer, shows a Tesla being driven through the streets of Paris. The re-creation only shows the visual data from the front-facing cameras.

If you freeze the video, you can read the identifications and their data points. The colors of the bounding boxes help identify object types even when they are distant: red equals vehicles, yellow equals pedestrians, and blue equals motorcycles or bikes. The software has an extensive library of recognizable objects, but it isn’t perfect. Green points out some interesting hits and some false positives in his posting. Traffic cones are recognized as shaping the driveable space, but a container on the side is mistaken for a vehicle and a pedestrian in a red jacket isn’t detected at all.
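The color coding described above amounts to a simple lookup from object type to box color. The sketch below is only an illustration of that idea: the label strings and the `box_color` function are assumptions, not names from the video or from Tesla’s software.

```python
# Color scheme as described for the video's bounding boxes.
# The label names are hypothetical; the video's internal labels are not known here.
BOX_COLORS = {
    "vehicle": "red",
    "pedestrian": "yellow",
    "motorcycle": "blue",
    "bicycle": "blue",
}


def box_color(label: str) -> str:
    """Return the bounding-box color for an object type, or 'unknown'."""
    return BOX_COLORS.get(label.lower(), "unknown")


for label in ("vehicle", "Pedestrian", "bicycle", "traffic cone"):
    print(label, "->", box_color(label))
```

Anything outside the three categories named in the text, such as the traffic cones, falls through to `unknown` here, because the article does not say how those are drawn.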

Posting on electrek.co (a news site tracking the transition to electric vehicles), editor Fred Lambert said of the video, “It’s not perfect, but it’s the best we have now. Hopefully, Tesla and those other companies become more open about the development of their autonomous driving systems. I think it would go a long way in making people better understand the systems and their limitations.”

What is clear from the video is that compared to humans, the Autopilot is far more comprehensive in what it sees and hears, and it’s relentlessly attentive. After a few minutes, did you find yourself checking out the monuments and architecture of the historic city? We also have a proclivity toward something called DWAM (driving without attention mode), during which “the driver performs in a state in which he has no active attention for the driving task and performs on ‘autopilot’,” according to the European Commission—Mobility and Transport. That’s a very different kind of autopilot.

WHAT A HUMAN SEES

For a different perspective on the new Tesla Autopilot 9.0, Jasper Nuyens has posted several videos, including this interior/exterior view of a Tesla running the latest version of the Autopilot software. Shot on a highway in the Netherlands, this clip shows the lane-changing options on Autopilot. There are several of these clips by Nuyens on YouTube, and although the video quality on this one isn’t stellar, he does explain more about the system and how it reacts to his commands along with its own autonomous systems.

Video: YouTube Jasper Nuyens

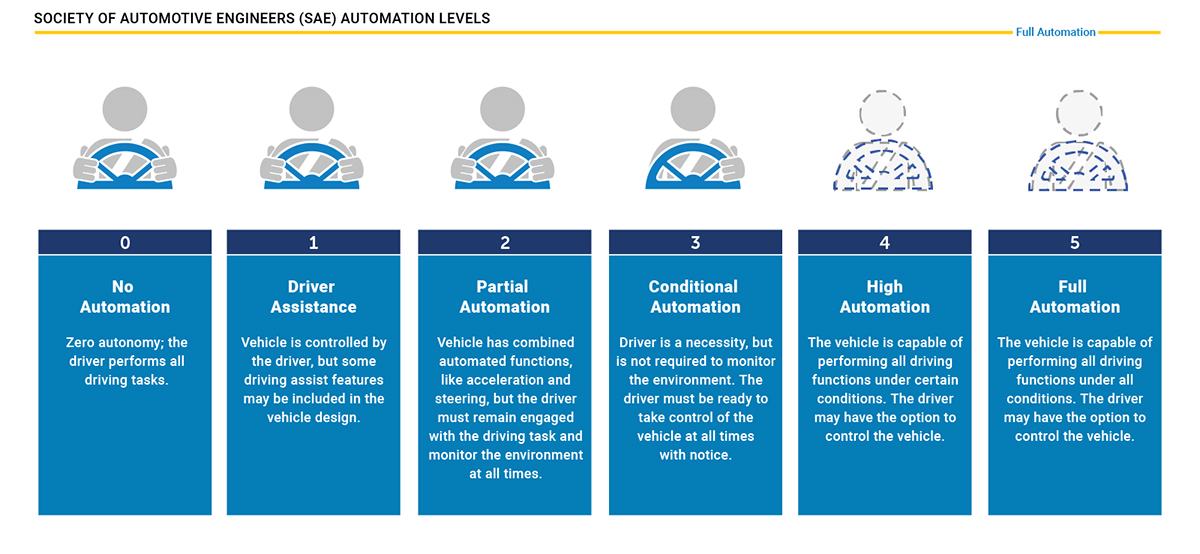

Tesla and numerous other carmakers are at various stages of development. Many are close to, or have actually achieved, Level 5 full autonomy, but none are ready yet for commercial distribution.

Image: National Highway Traffic Safety Administration

The next challenges to be tackled are systems that will allow autonomous cars to share information with other autonomous vehicles in the vicinity, so that they can avoid one another, and changes in roadway design that will help make the most of these kinds of vehicles.