AI is broadly thought of as the creation of intelligent machines that can analyze large amounts of data and follow rules defined by algorithms, enabling them to engage in problem solving. Building AI systems involves developing software that exhibits traits such as knowledge, reasoning, problem solving, perception, learning, and planning.

Given the focus on judgment and decision making, AI systems should be built around ethical principles that provide guidance on collecting data, processing it, and reporting the results. The collection of data for processing by AI systems should be done objectively and with confidentiality assured. From an auditing perspective, the data should be trustworthy and verifiable.

Because of the impact AI systems can have, accounting educators should commit to teaching students about AI’s influence on data gathering, processing, and reporting and how it can be used to improve professional judgment and decision making, particularly in the areas of tax, auditing, and advisory services. Students don’t need to be experts in AI and machine learning, but they should be confident interpreting data that has been analyzed with the use of AI systems.

Educators need to stay ahead of the ethical issues surrounding the use of AI systems and to identify the key related areas of AI that can be taught, such as the benefits of using AI for accounting and finance professionals, governance and accountability, ethics and risk analysis, regulation, and practical steps for integrating data analysis and AI into the accounting curriculum.

BENEFITS OF USING AI

In “Meeting the Challenge of Artificial Intelligence,” Paul Lin and Tom Hazelbaker wrote that each of the Big Four accounting firms is using AI in a variety of projects (The CPA Journal, June 2019). Examples they mentioned include the following:

- Automating the process of reviewing and extracting relevant information from various documents (Deloitte).

- Using deep learning to analyze unstructured data such as emails, social media posts, and conference call audio files (EY).

- Developing an AI-enabled system capable of analyzing documents and preparing reports (PwC).

- Developing tools to integrate AI, data analytics, cognitive technologies, and robotic process automation (KPMG).

AI can be used to look for fraud by analyzing large amounts of data to determine whether any material misstatements exist. This can be done by identifying outliers, or anomalies, in the data with the help of machine learning. For example, an employee might submit a request to be reimbursed $100 for a business meal at a local restaurant. The AI system could be programmed to flag unusual amounts, such as round numbers, for further scrutiny. After all, it’s unlikely that a restaurant bill comes to exactly $100; the payment could instead have been for a $100 gift certificate.
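To make the expense example concrete, here is a minimal Python sketch of two simple screening rules: flagging amounts that are exact multiples of $50, and flagging amounts whose modified z-score (based on the median absolute deviation) exceeds 3.5. These are simple statistical stand-ins rather than a trained machine learning model, and the `Expense` record, the $50 unit, the 3.5 cutoff, and the sample amounts are all hypothetical assumptions. A flag only routes an item for human review; it is not evidence of fraud.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class Expense:
    employee: str
    category: str
    amount: float  # reimbursement requested, in dollars (hypothetical record)


def is_round_amount(amount: float, unit: float = 50.0) -> bool:
    """True if the amount is an exact multiple of `unit` (e.g., $100.00)."""
    # Compare in whole cents to avoid floating-point remainders.
    return round(amount * 100) % round(unit * 100) == 0


def robust_outliers(amounts: list[float], threshold: float = 3.5) -> list[bool]:
    """Flag values whose modified z-score (median absolute deviation) exceeds threshold."""
    med = median(amounts)
    mad = median([abs(a - med) for a in amounts])
    if mad == 0:
        return [False] * len(amounts)  # no spread, so nothing stands out
    return [abs(0.6745 * (a - med) / mad) > threshold for a in amounts]


def flag_expenses(expenses: list[Expense]) -> list[tuple[Expense, list[str]]]:
    """Return each expense needing review, with the reasons it was flagged."""
    outliers = robust_outliers([e.amount for e in expenses])
    flagged = []
    for expense, is_outlier in zip(expenses, outliers):
        reasons = []
        if is_round_amount(expense.amount):
            reasons.append("round amount")
        if is_outlier:
            reasons.append("statistical outlier")
        if reasons:
            flagged.append((expense, reasons))
    return flagged


# Hypothetical meal reimbursements.
meals = [
    Expense("A", "meal", 42.17),
    Expense("B", "meal", 38.90),
    Expense("C", "meal", 100.00),
    Expense("D", "meal", 45.63),
    Expense("E", "meal", 51.20),
    Expense("F", "meal", 36.75),
]
for expense, reasons in flag_expenses(meals):
    print(expense.employee, expense.amount, reasons)
# prints: C 100.0 ['round amount', 'statistical outlier']
```

The median-based score is used instead of a mean and standard deviation because a single large claim would otherwise inflate the spread and partly hide itself.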

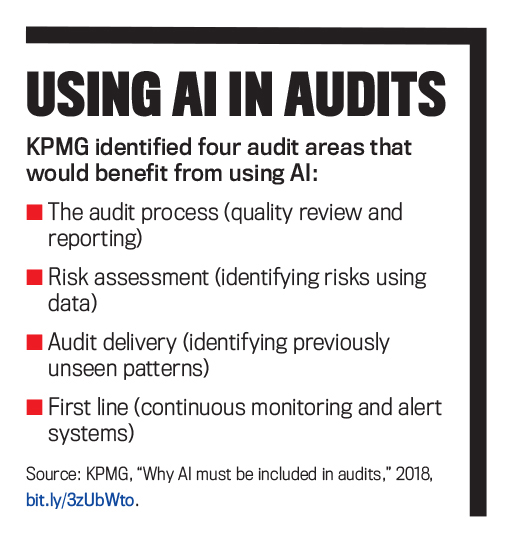

Management accountants need to understand the role of internal controls and risk assessment and whether ethical decisions have been made using AI data. Internal auditors need to assess whether the controls are operating as intended and whether effective governance systems are in place (for example, the role of the audit committee). (For more on AI and audits, see “Using AI in Audits.”)

Finance leaders should embrace new technologies to enhance digital transformations. CFOs play an important role in applying the new technologies to decision-making areas, such as predicting real values of financial assets and return on investment, identifying material misstatements that can be predicted through algorithms, and enhancing organizational efficiency.

We should expect the number of activities that can be addressed by AI to continue to increase. What has been missing from articles on these issues is a discussion of the role ethics should play in learning about AI and of the related pedagogical issues.

GOVERNANCE AND ACCOUNTABILITY

As I pointed out in “Ethical AI is Built on Transparency, Accountability and Trust” (Corporate Compliance Insights), governance and accountability issues fall into certain categories:

- Ethics standards for AI,

- Governance of the AI system and data,

- Internal controls over data,

- Accountability for unethical practices,

- Compliance with regulations, and

- Reporting findings directly to the audit committee or the board of directors.

In “AI: New Risks and Rewards” (Strategic Finance, April 2019), Mark A. Nickerson raised an important question: “Is the individual still ultimately going to be held to the highest standards of fiduciary responsibility and due care even in those instances where decisions and analyses were executed by AI systems?” Someone or some group needs to be held accountable to ensure proper standards are followed and adjustments are made based on an audit of AI systems.

ETHICAL RISKS

Ethics and AI systems should be addressed in the context of the mission of the organization and included in the provisions of a code of conduct. This would help to determine the goal of using algorithms and who will be accountable for their use. The key is to define who in the organization is responsible for processing, analysis, and decision making, and to understand how the data was introduced into the system: Is it representative of the population it describes, or is it biased in some fashion?

AI systems are often referred to as a “black box,” as it can be difficult to fully understand the complex calculations and factors that lead to a particular decision or prediction. AI automates judgments such as yes/no and right/wrong. These judgments should be made in an ethical way to promote responsible decision making.

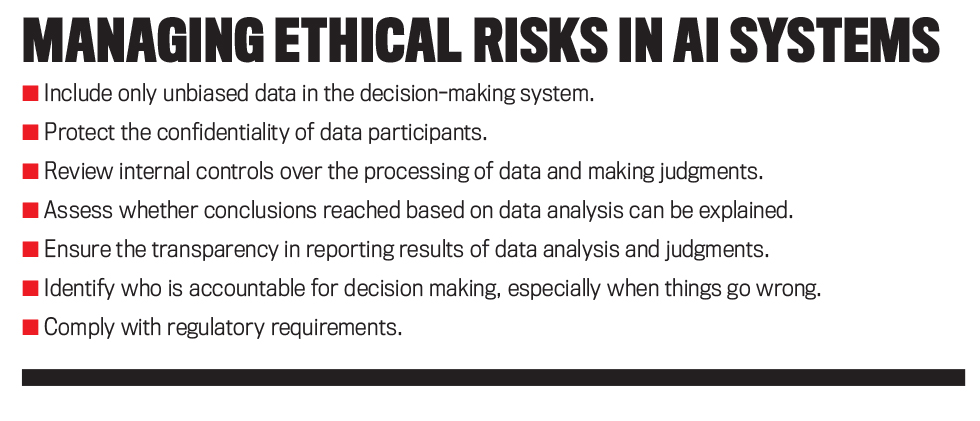

Ethical risks in these AI systems range from how the data is collected and processed to the validity of conclusions reached from the analysis of data. Bias is a potential problem if, for example, ZIP codes are used to identify good credit risks for mortgage lending. It could be that people living in one community are the least risky borrowers, but building their ZIP codes into an AI decision-making model may result in bias against those living in minority communities. Managing the ethical risks in an AI system, as outlined in “Managing Ethical Risks in AI Systems,” is similar to managing those risks involved in any decision-making system.
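One way to test a lending model for the ZIP code problem is to compare outcomes across communities, even when community is not itself a model input. The Python sketch below assumes hypothetical approval decisions labeled by community and flags any group whose approval rate falls below 80% of the highest group’s rate. The 0.8 cutoff borrows the “four-fifths” rule of thumb from U.S. employment-selection guidance purely as an illustrative screen; it is not drawn from lending regulation, and a flag calls for investigation rather than proving discrimination.

```python
from collections import defaultdict


def approval_rates(decisions: list[tuple[str, bool]]) -> dict[str, float]:
    """Map each group to the share of its applications the model approved."""
    totals: dict[str, int] = defaultdict(int)
    approvals: dict[str, int] = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        approvals[group] += int(approved)
    return {group: approvals[group] / totals[group] for group in totals}


def flag_disparities(
    decisions: list[tuple[str, bool]], threshold: float = 0.8
) -> dict[str, float]:
    """Return groups whose approval rate, relative to the highest-rate group,
    falls below `threshold` (0.8 mirrors the 'four-fifths' screening heuristic)."""
    rates = approval_rates(decisions)
    best = max(rates.values())
    if best == 0:
        return {}  # no approvals at all, so the ratio is undefined
    return {g: r / best for g, r in rates.items() if r / best < threshold}


# Hypothetical model outcomes for applicants from two communities.
decisions = (
    [("Community A", True)] * 80 + [("Community A", False)] * 20
    + [("Community B", True)] * 50 + [("Community B", False)] * 50
)
print(flag_disparities(decisions))  # {'Community B': 0.625}
```

Comparing outcomes, rather than only inspecting the model’s inputs, matters here because a variable such as ZIP code can act as a proxy for a protected characteristic that was never fed to the model directly.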

RISK MANAGEMENT

Given their broad scope, it’s essential that ethical risks be managed to enhance the reliability of decision making and to promote useful machine learning. Machines learn based on the data processed. If the data sample isn’t representative or accurate, then the lessons they learn from the data won’t be accurate and may even lead to unethical outcomes.
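Whether training data is representative can be screened, at least on a single attribute, by comparing the mix of records in the sample with the mix in the full population. The Python sketch below assumes hypothetical region labels and a tolerance of 5 percentage points; both are illustrative choices. Passing this check on one attribute does not show that the data is free of bias on others.

```python
from collections import Counter


def category_shares(labels: list[str]) -> dict[str, float]:
    """Share of records falling in each category."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {category: n / total for category, n in counts.items()}


def representativeness_gaps(
    sample: list[str], population: list[str], tolerance: float = 0.05
) -> dict[str, float]:
    """Categories whose sample share differs from the population share by more
    than `tolerance` (positive = over-represented, negative = under-represented)."""
    s, p = category_shares(sample), category_shares(population)
    gaps = {}
    for category in sorted(set(s) | set(p)):
        diff = s.get(category, 0.0) - p.get(category, 0.0)
        if abs(diff) > tolerance:
            gaps[category] = round(diff, 4)
    return gaps


# Hypothetical regions of the records used to train a model versus all records.
population = ["North"] * 500 + ["South"] * 300 + ["West"] * 200
sample = ["North"] * 52 + ["South"] * 43 + ["West"] * 5
print(representativeness_gaps(sample, population))  # {'South': 0.13, 'West': -0.15}
```

Here the West region makes up 20% of the population but only 5% of the sample, so a model trained on this sample would learn little about it.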

Risk management in AI systems starts by creating an organizational culture that supports good governance. According to the Institute of Internal Auditors (IIA), the governance of AI systems encompasses the “structures, processes, and procedures implemented to direct, manage, and monitor the AI activities of the organization” (Global Perspectives and Insights: The IIA’s Artificial Intelligence Auditing Framework). Governance structures vary depending on the scope of usage in an organization, but certain ethical principles should be followed to determine whether governance structures and processes are fulfilling their oversight role, including accountability, responsibility, compliance, and adherence to the standards of an ethical framework.

ROLE OF CFOs

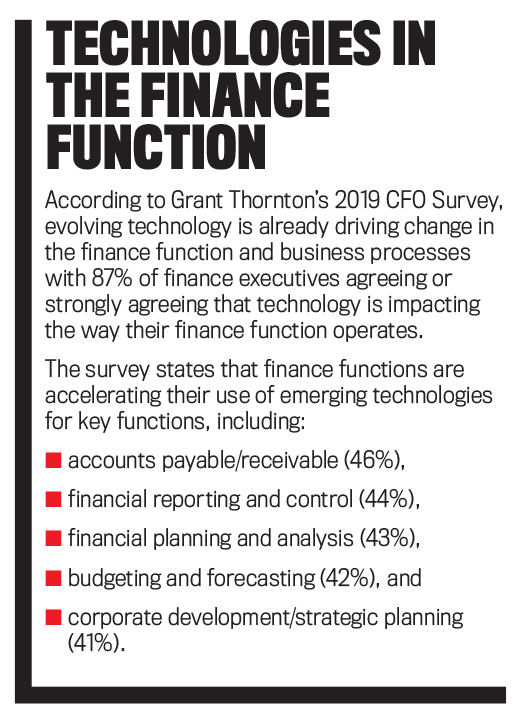

Grant Thornton’s 2019 CFO Survey (see “Technologies in the Finance Function”) found that a significant percentage of senior financial executives currently implement technologies such as advanced analytics (38%) and machine learning (29%). Year-over-year comparisons indicate that 42% of CFOs surveyed reported that their finance functions regularly make use of advanced and automation technologies in corporate development and strategic planning, compared to 18% in the 2018 survey.

There’s no doubt that CFOs will play an increasingly important role as financial leaders to ensure their organizations are using AI to facilitate strategic initiatives and operate more efficiently. Nearly 91% of respondents agree or strongly agree it’s the CFO’s job to ensure that their companies fully realize the benefits of technology investments. Ninety-five percent of respondents said their company’s CFO is a key stakeholder of enterprise transformation planning.

Learning about how AI systems process data and reach conclusions is a must for accounting students, whether they plan to work in public accounting, private industry, or the government sector. The profession’s future leaders should drive the innovation so that organizations can integrate AI systems into both financial and managerial decision making. Accounting educators have an important role to play and should educate themselves on the ethical use of AI.

REGULATION

The U.S. Office of Management and Budget released a draft memorandum on January 13, 2020, providing guidance to agencies on how to approach regulation of industry’s AI applications. The memo’s central message is that regulatory action shouldn’t hinder the expansion of AI. It states: “Agencies must avoid a precautionary approach that holds AI systems to such an impossibly high standard that society cannot enjoy their benefits. Where AI entails risk, agencies should consider the potential benefits and costs of employing AI, when compared to the systems AI has been designed to complement or replace.”

This cost-benefit approach to ethical decision making in the AI realm suffers from the shortfalls of all utilitarian assessments. With AI, how can the potential costs of bias in the algorithms be measured? The potential harm to some stakeholders is real, particularly to those who have been discriminated against because the algorithms include variables that may be harmful to their interests.

Regulation can be approached industry by industry (for example, healthcare and financial services) or across all areas collectively. This is the challenge for regulators. Given the myriad applications of AI systems in consumer and business decision making, the question is whether AI should be regulated and, if so, how to do it.

So-called “soft laws,” such as the IMA Statement of Ethical Professional Practice or the American Institute of Certified Public Accountants Code of Professional Conduct, establish frameworks for oversight but aren’t directly enforceable by government. Regulators should pay attention to established standards such as those embodied in the IMA Statement: competence, confidentiality, integrity, and credibility.

The usage of certain technologies should be regulated, or at a minimum monitored, to prevent their misuse or abuse toward harmful ends. Lawmakers and regulators in the United States have focused primarily on AI in autonomous or self-driving vehicles, where the potential for injury is high if the vehicles fail to protect lives in all possible scenarios.

Concerns about potential misuse or unintended consequences of AI have prompted efforts to examine and develop standards, such as those led by the U.S. National Institute of Standards and Technology (NIST). NIST’s initiative involves workshops and discussions with the public and private sectors around the development of federal standards to create building blocks for reliable, robust, and trustworthy AI systems.

State lawmakers also are considering AI’s benefits and challenges. A growing number of measures are being introduced to study the impact of AI and the potential roles for policy makers. In 2020, at least 14 states introduced general AI bills or resolutions, all of which failed or remain pending.

PRACTICAL STEPS

Accounting students need to know how AI systems can be used to improve judgments and enhance ethical decision making. The profession’s future leaders should guide organizations to integrate AI systems into both financial and managerial decision making. This means that today’s accounting educators have an important role to play and should educate themselves on the ethical use of AI now.

The overall objective of curriculum coverage of AI should be to teach students how to become comfortable with the application of AI in decision making and related ethical considerations. There are two ways to do so: integration throughout the curriculum or a stand-alone course. Ideally, both should be done, but that may be unrealistic given curriculum constraints at most colleges and universities. Either way, the earlier the implications of AI are introduced, the more likely it is that accounting graduates will understand the dangers of its use.

Most accounting programs have accounting information systems (AIS) courses in their curriculum, but these are typically upper-junior or senior-level courses. Broad topical coverage generally includes: (1) collection and storage of data concerning an organization’s financial activities; (2) developing the ability to apply critical thinking and make judgments using AIS-generated data; (3) developing effective information systems controls; and (4) auditing AIS.

Separate AIS courses can provide a good place to integrate AI topics in the context of decision making, but more than that is needed. Integration throughout the curriculum demonstrates how data produced by AIS can be applied in a variety of topical areas, including financial accounting, managerial accounting, and auditing courses.

AI is a subset of, or a topic within, data analytics. Data analytics has now been introduced as a topic in virtually all new accounting textbooks, starting with introductory accounting, so it can be integrated across the curriculum. Many programs also offer required courses and electives in data analytics, such as electronic data processing (EDP) auditing.

What’s needed now is to ensure that ethics is integrated into the coverage. Too often topics are added with no consideration for the ethics surrounding them. With data analytics, there are ethical considerations surrounding the confidentiality of the data, how the data is collected, and how the data is used. Because the output of AI analysis can be inaccurate, discussions should focus on whether the data is biased in ways that lead to poor or unethical decisions.

Assuming the integration approach, Table 1 summarizes selected areas of the curriculum and how ethical issues can be addressed. This isn’t meant to be a complete listing of topics; it’s only to provide educators with some recommendations about integration. These topics can also be incorporated into a stand-alone course.

Financial statement analysis entails calculating ratios and other comparative data to detect anomalies and trends in the data. Representational faithfulness is a critical component of the reliability of data. It generally means the correspondence or agreement between the accounting measures or descriptions in financial reports and the economic activity they’re meant to represent. The characteristics of representational faithfulness are completeness/full disclosure, fairness/freedom from bias, and freedom from error or omissions.
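The ratio analysis described here can be sketched in a few lines of Python. The `Financials` fields, the figures, and the 20% change threshold are hypothetical, and days sales outstanding is simplified to use year-end receivables. In this example, receivables grow much faster than revenue, so days sales outstanding jumps while the other ratios barely move; that pattern might reflect a change in credit terms or a problem with revenue recognition, so it is a prompt for inquiry rather than a conclusion.

```python
from dataclasses import dataclass


@dataclass
class Financials:
    revenue: float
    cost_of_sales: float
    receivables: float  # year-end accounts receivable
    current_assets: float
    current_liabilities: float


def ratios(f: Financials) -> dict[str, float]:
    """A few common comparative measures (simplified: year-end balances only)."""
    return {
        "gross_margin": (f.revenue - f.cost_of_sales) / f.revenue,
        "current_ratio": f.current_assets / f.current_liabilities,
        "days_sales_outstanding": f.receivables / f.revenue * 365,
    }


def flag_changes(
    prior: Financials, current: Financials, max_change: float = 0.20
) -> dict[str, float]:
    """Ratios whose relative year-over-year change exceeds `max_change`."""
    before, after = ratios(prior), ratios(current)
    flags = {}
    for name, old in before.items():
        change = (after[name] - old) / old
        if abs(change) > max_change:
            flags[name] = round(change, 3)
    return flags


# Hypothetical figures for two consecutive years.
prior = Financials(1_000_000, 600_000, 120_000, 500_000, 250_000)
current = Financials(1_100_000, 650_000, 220_000, 560_000, 270_000)
print(flag_changes(prior, current))  # {'days_sales_outstanding': 0.667}
```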

Ethics and AI should go hand in hand. If the data gathered by AI systems is biased or incomplete, the analyses using the data can’t be relied upon. Concepts such as fairness, objectivity, professional skepticism, and representational faithfulness underlie the ethics of AI and decision making in accounting. Accounting educators who want to truly prepare their students for tomorrow’s workplace must incorporate AI into as many areas as possible and be sensitive to the ethical issues discussed throughout.

August 2021