The PCAOB was charged to “establish auditing and related attestation, quality control, ethics, and independence standards and rules to be used by registered public accounting firms in the preparation and issuance of audit reports.” SOX also mandated numerous steps to reform the public accounting industry, including establishing standards for auditor independence, enhanced financial disclosures, and criminal fraud accountability.

While these regulations and independent entities such as the PCAOB have largely proven successful over the last 15 years, the implementation of AI stands to open up a considerable host of issues relating to analytical work, strategic decision making, and more in the financial environment. According to a recent survey by MIT Sloan Management Review in collaboration with The Boston Consulting Group, almost 85% of the more than 3,000 executives surveyed expect that AI (technology and computer systems that can perform tasks that normally require human intelligence) will give them a competitive advantage, while 79% believe it will increase productivity.

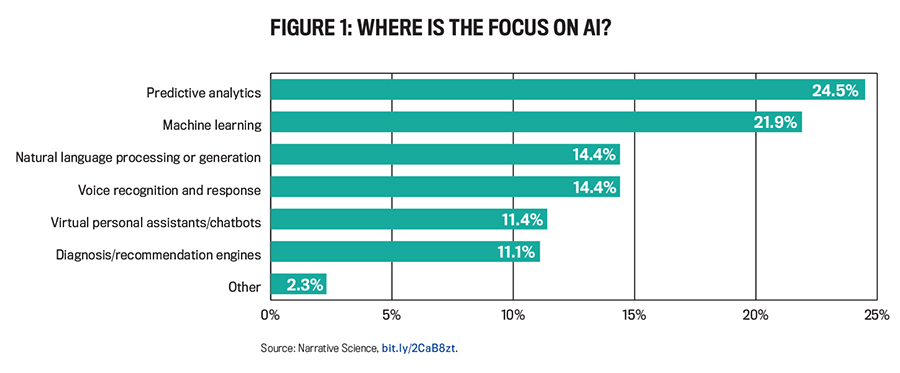

In addition, a new report from Narrative Science and the National Business Research Institute states that 61% of businesses said they implemented AI last year. That number represents a drastic increase from just 38% in 2016, demonstrating that AI may no longer be the way of the corporate future but its present. Of these businesses, the majority are using AI with a focus on predictive analytics, machine learning, or natural language processing (see Figure 1).

AI IS HERE

In addition to boards of directors, large CPA firms, including most of the Big 4, have made substantial investments in AI while they look to cut human time spent on complex audits and other data-analytics-based engagements. A recent article in Forbes, “EY, Deloitte And PwC Embrace Artificial Intelligence For Tax And Accounting,” took a closer look at how each company was utilizing AI in its practices. The organizations were eager to highlight many successes and efficiencies achieved by their early investments in AI.

Both EY and Deloitte use natural language processing for document review, including examining high volumes of contracts related to business unit sales and reexamining tens of thousands of client leases in determining compliance with new accounting lease standards. In both examples, what may have previously taken months is down to only taking weeks. Additionally, EY is using machine learning to help analyze and identify potentially fraudulent transactions, while Deloitte uses natural language generators in its tax practice to provide targeted financial advice.

While boards of directors and CPA firms alike must remain innovative, the question is how these technological advances will affect the success of SOX and other reforms that increased attention to areas previously neglected by executives and auditors. Is human reliance on AI setting the stage for more or fewer financial scandals?

AI systems and their associated algorithms aren’t inherently intelligent; rather, they rely on machine learning developed over time by analyzing large volumes of repetitive data. A recent report by the Institute of Chartered Accountants in England and Wales (ICAEW), Artificial intelligence and the future of accountancy, outlines a framework for embracing opportunities created by machine intelligence. It asks three important questions: What is the long-term vision for AI and accountancy? How do artificial and human intelligence work together? How are accountants using AI capabilities?

Indeed, machines have substantial benefits over humans in areas such as processing large volumes of data quickly, providing consistency in decision making, and identifying complex or changing patterns in data much faster than individuals. AI also provides opportunities to reduce human errors, produce better predictive or forecasted results, and increase profits through specifically identified AI initiatives.

Yet there are still many limits to AI that can’t be ignored, specifically as it pertains to financial results from companies in varying industries, locations, and sizes. AI systems today still aren’t very flexible, and they require millions of data points to analyze before producing any systematically accurate results. The questions must be repeatable for the machine to generate learning, so for unique accounting or corporate situations, AI may be unreliable in the short term at producing accurate or valuable results. Early reliance on these results could prove extremely problematic. In addition, not all questions that stakeholders must examine in matters of fiduciary responsibility and due care are quantitative or involve specific data to be analyzed at all. Of course, in the big picture (see Figure 2), the opportunities also come with limitations and risks.

DRAWBACKS AND CHALLENGES

One negative aspect for people has been the replacement of human capital by software as programs become more efficient. A recent article in The Wall Street Journal, “The New Bookkeeper Is a Robot,” indicated that from 2004 to 2014 alone, the median number of full-time employees in the finance departments at big companies declined 40%, from 119 employees for every $1 billion of revenue down to approximately 71. Publicly traded companies such as Verizon Communications Inc. and GameStop Corp. are among those that have made substantial software investments, allowing them to automate many accounting and financial functions previously performed by additional employees. Verizon alone reduced costs related to its internal finance department by 21% from 2012 to 2015.

As time passes, more and more revelations are being made pertaining to the risks and unintended consequences of AI as well (see “Caution: AI at Work” at end of article). The main risks include human biases and errors unintentionally developing in the technology itself. For instance, image-recognition software built by a University of Virginia professor learned to exhibit sexist views of women (bit.ly/2STLb1C). Another study, this time by an assistant professor at the University of Massachusetts, Amherst, showed that AI systems learned to exclude some African American individuals from data sets based on vernacular (bit.ly/2XKq3OF). These are only two of many examples detailing direct bias by AI machine learning systems. But what happens when the bias that develops pertains to increasing profits, meeting or exceeding forecasts, or increasing stockholder returns? Legal issues that could have ramifications under SOX have also begun to emerge.

AI’S RAMIFICATIONS

One of those legal issues involves who is ultimately responsible for decisions made by AI. Swiss artists recently created an automated online shopping robot and asked it to spend $100 in bitcoin each week as part of an art exhibit. The program was charged with purchasing items on the dark web marketplace, which contains both legal and illegal goods. Among other items, the robot purchased a baseball cap with a hidden camera, a pair of Nike basketball shoes, a fake Louis Vuitton handbag, 200 cigarettes, a set of fire-brigade-issued master keys, and 10 ecstasy pills (bit.ly/2Cbo6Sg). Who is at fault for obtaining the illegal goods, the artists or the robot?

The same question must then be addressed when it comes to accounting fraud or financial scandals. Can a robot or computer be fined or imprisoned when that fraud goes undetected or, worse, when the system learns to become complicit like humans have in the past? Is the individual still ultimately going to be held to the highest standards of fiduciary responsibility and due care even in those instances where decisions and analyses were executed by AI systems?

Currently, the legal system has only found personal injury liability for software developers where those developers were negligent or foresaw imminent harm. In Jones v. W+M Automation, Inc., which involved a worker being seriously injured by a robotic loading system, the defendant was found not liable simply because the manufacturer had complied with regulations. With AI learning, there may be no fault by humans in failing to identify specific frauds or improprieties and no foreseeable injury based on implementing such software. Current tort law may assert no liability for the developer or purchaser of those systems.

Even in instances of fraud, as AI systems continue to adapt and learn on their own, courts may be forced to address these issues and determine if users themselves can be found liable in personal injury cases based on new or expanded agency law. Laws of agency as they exist now may not apply. Laws of agency (or agency law) revolve around the idea that one individual, the principal, authorizes a second, known as the agent, to interact with a third party on their behalf. When such an agency relationship exists, the principal is ultimately the responsible party when and if the agent causes any harm or injuries to the third party. It could be argued that once AI software begins thinking or making decisions on its own, the agency relationship by law becomes strained or, in fact, is broken completely. If that’s the case, how can anyone be held responsible?

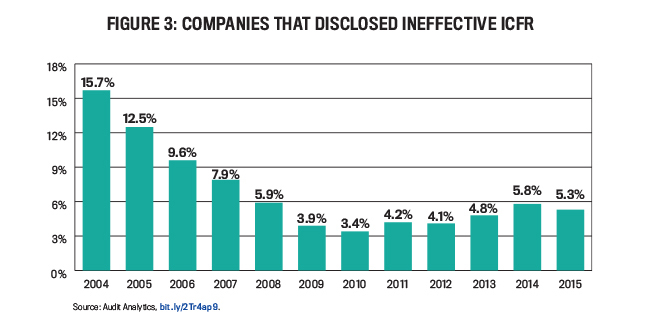

On top of it all, some wonder if the impact of SOX is already starting to wear thin as individuals potentially become complacent once again or if implementation of AI has lessened the focus on important internal factors such as internal controls. According to the Audit Analytics study “The Impact of SOX on Financial Restatements,” the number of companies that have disclosed ineffective internal control over financial reporting (ICFR) declined drastically in the years after implementation of SOX 404(b), though that figure has been on the rise again recently (see Figure 3).

There’s no doubt that AI should and will play a large role in corporate finance and accounting departments and at CPA firms, but if AI can develop racist, sexist, or other biases, could it also develop greed or compromise ethically to produce positive results? Regardless of technological advances, auditors, directors, officers, and CEOs alike must continue to evolve with the times. These individuals must remain professionally skeptical when leveraging AI as a cost-effective venture or time saver over human examination.

Professional skepticism is required by auditors in exercising due professional care within their work under AU Section 230.07. The section states, “Professional skepticism is an attitude that includes a questioning mind and a critical assessment of audit evidence. The auditor uses the knowledge, skill, and ability called for by the profession of public accounting to diligently perform, in good faith and with integrity, the gathering and objective evaluation of evidence.” The question must be raised as to how objective CPAs are in basing their opinions on information or evidence that AI has examined (but that they haven’t).

The report from the ICAEW, for instance, provides many positive reasons and examples for expanding the use of AI in accounting but also focuses heavily on the evidence of better results from human-computer collaboration. The critical eye of the auditor or CEO will always be instrumental in preventing fraud, regardless of how intelligent machine systems may become, and professionals, regulators, and the entire world may need to realize that.

Auditors, accountants, and executives must be prepared for the continued emergence of AI in the financial marketplace. That preparation needs to include understanding AI basics, the roles that humans still can and should play, and AI risks and opportunities. To avoid a major step backward after the implementation of SOX, we must educate ourselves on specifically which areas should and shouldn’t remain in human hands.

CAUTION: AI AT WORK

AI systems depend on neural networks capable of learning. Without transparent algorithms, the learning can be unsupervised within, essentially, a black box.

Interpretability

2018. Harvard professor Margo Seltzer warns of an AI failure where a computer model misreads an observed result as a cause. She cites a computer study of three-quarters of a million patients with pneumonia. One conclusion of the model noted that asthma patients were less likely to die of pneumonia; the data appeared to show that their asthma protected them. Seltzer explained the dangerous misinterpretation: “If you show up at an ER and have asthma and pneumonia, they take it way more seriously than if you don’t have asthma, so you’re more likely to be admitted.” The algorithm didn’t know about the more aggressive treatment. The problem is called “interpretability.”

Robots Learn to Lie

2009. An experiment in Lausanne, Switzerland, fitted 1,000 small robots with a sensor, a blue light, and programming to seek out good resources (“food”). When one located the food, it turned on its light to signal others to come over. Points were assigned for finding, remaining with, and signaling, with negative points for hanging around negative stimuli (“poison”) or for being crowded out away from the food by others. After each trial, the best seekers continued on. After 500 generations, 60% evolved to keep their light off when they found the good resources, keeping it to themselves, and 33% of the robots “evolved to actually look for the liars by developing an aversion to the light; the exact opposite of their original programming.”

Fatal Autonomy

2018. The first autonomous vehicle-related pedestrian death was recorded when an Uber SUV in autonomous mode with a human safety-driver aboard made the decision not to react when the car’s sensors detected the pedestrian. The Uber autonomous mode had disabled the Volvo’s factory-installed automatic braking as it was designed to do, according to the National Transportation Safety Board (NTSB) preliminary report.—Michael Castelluccio

April 2019