In June and July 2019, in research sponsored by SAS, the group surveyed 2,280 MIT Sloan Management Review readers, including analytics experts, practitioners, consultants, and academics. It identified three areas that change as organizations work to implement AI: organizational culture, technology strategy, and technology governance.

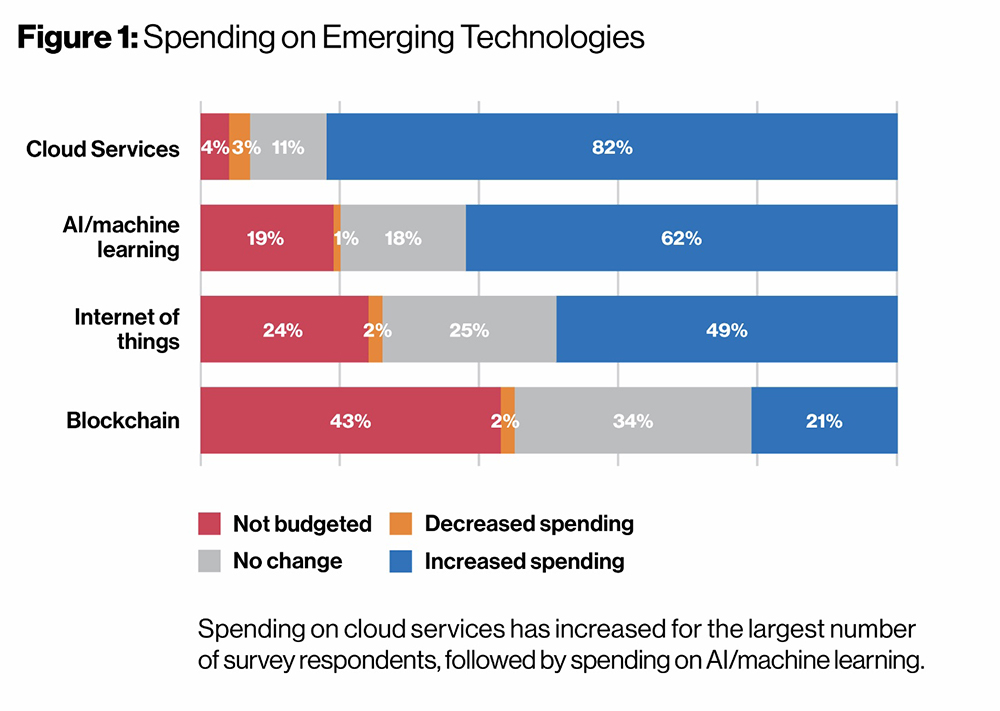

Most respondents indicated that they are in the early stages of considering AI. Only 5% said they were implementing it widely across the organization; 18% were deploying AI in a few processes; 19% were piloting AI projects; 13% were in the planning stage; the largest group, 27%, were investigating possible adoption; 14% said they were neither adopting nor considering it; and 4% said they didn’t know. Current spending increases on the most popular emerging technologies give a more nuanced picture of where organizations stand today. The biggest investments are in cloud services, but AI and machine learning show a respectable 62% increase.

Image courtesy MIT SMR Connections.


CULTURE

AI requires a long-term investment and, consequently, a long-term mindset. It demands more of top leaders and drives more organizational change than other emerging technologies. The writers of the report explain, “This means that organizational leaders need to ensure that traditional silos don’t hinder AI efforts, and they must support the training required to build skills across their workforce.”

Peter Guerra, North America chief data scientist at Accenture, was interviewed for the report and warned against treating AI as the latest new thing that will deliver a quick payback. “It should be something that drives everything you do, meaning it underpins everything that you do.”

Ray Wang, principal analyst at Constellation Research, added, “AI requires a long-term philosophy. You have to understand that you’re going to make this long-term investment with an exponential payoff toward the end. Leaders often don’t understand how to do that, so they keep making short-term decisions for earnings per share instead of thinking about the long-term health of the company.”

Because AI depends so heavily on data sets, top leadership must commit to managing data as a key asset. Melvin Greer, chief data scientist for the Americas at Intel, writes in the report, “Being able to treat data as an enterprise asset means that there is somebody whose primary job is to understand where the data is and how it can be used to further enterprise mission or goals.”

This might be a stretch for some. Astrid Undheim, vice president of analytics and AI at the Oslo-based Telenor Group, adds, “If we think about the big barrier to really succeeding with AI, the big job that needs to be done that executives may not truly understand is the need for good quality and high volumes of data. AI and prediction models often need completely different sets of data than what we have had before.”

Most of those surveyed said they expected AI to increase activities that “bridge functions and disciplines across the organization,” which suggests that AI will shape a more connected organization.

DEMANDS ON IT

AI isn’t plug-and-play: you can’t set it up and then check on it only when alerts come through. The demands placed on IT go well beyond training and deployment, because machine learning and AI algorithms are designed to change as they learn and improve. “You’re always collecting data, and you’re always refining the model. This isn’t something static,” writes Wang. “You also have to make sure that once you train the system, you can also ‘unlearn’ the system. You have to be able to take corrective action...if a pattern that’s assumed to be correct is incorrect.” That makes AI different from typical software development. Another source of pressure on the IT group is the need to manage data holistically and proactively across the entire organization.

AI development also requires closer collaboration between business and IT functions than more traditional application development projects do. This cross-functional cooperation needs to begin sooner, be more extensive, and last longer. Business experts have to get smarter about how technology works, and technology experts need to become more astute about business. One commentator in the report explains, “Historically, business leaders wouldn’t be bothered about whether this is in that database or this database. But now they do, and it does matter.” The organization’s data will become a shared responsibility.

RISK AND ETHICS

The survey shows that responsibility for managing AI risk is as likely to rest with the CIO, CTO, or CEO as it is to be shared. One notable shift is that “very few organizations appear to be assigning accountability along traditional lines of risk management, to legal or financial executives.” Organizations already implementing AI lean toward shared responsibility, but very few overall have plans for remediating damage that AI applications might cause.

The report writers argue that “granting inanimate technology a kind of agency and autonomy—and even some degree of personality as in the cases of automated personal assistants—does carry real potential risks that organizations must manage.” Complicating matters, learning systems are designed to learn and therefore change constantly.

Asked to rate AI reliability on a scale from 1 (least reliable) to 10 (most reliable), respondents gave personal AI technology an overall rating of 7 and AI used in their companies a 6.

In general, concerns did not vary widely across the five world regions, with two exceptions. Respondents in Europe had significantly less trust in the AI systems used in their personal lives, and North Americans were much more concerned about bad customer experiences caused by AI.

Respondents rated six risks as high concerns. The question was: How worried are you that AI may:

- Deliver inadequate ROI.

- Produce bad information.

- Be used unethically.

- Support biased, potentially illegal decisions.

- Produce results that humans can’t explain.

- Be too unpredictable to manage adequately.

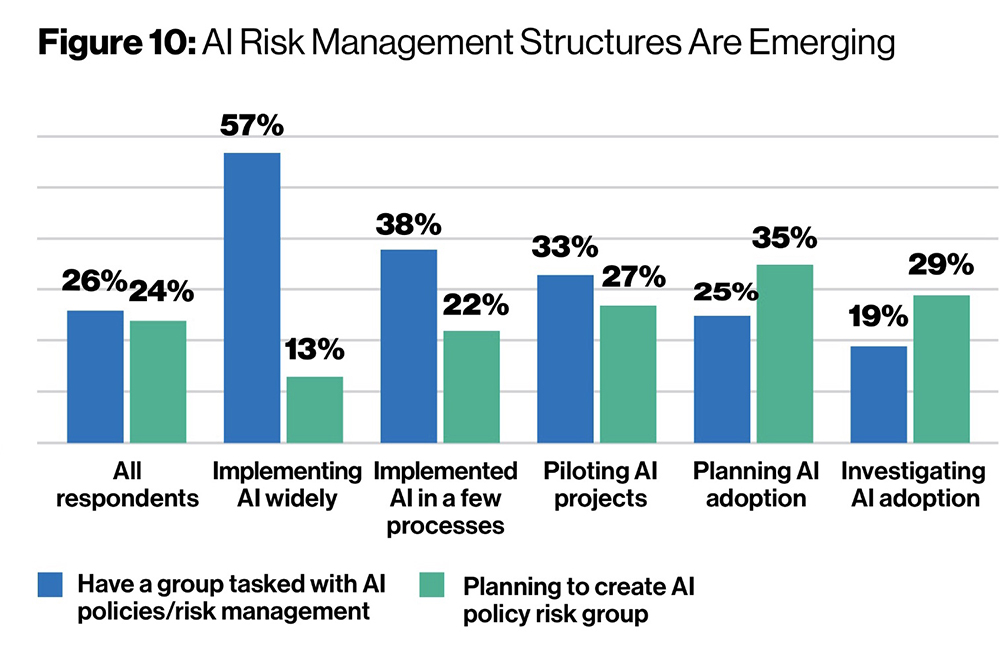

AI calls for new risk management structures, and about half of the respondents are working to create organizational structures that can provide oversight “that seeks to understand and verify how models function, mitigate [data] bias, and anticipate unintended consequences.” The graph shows the level of planning by respondents’ relative level of AI activity.

Image courtesy MIT SMR Connections.


One interesting new dimension in risk management is “explainability.” The report writers define the term: “An issue that is new to many who consider risk management is adequate explainability—the ability to identify the key factors that a model used in producing results, which may be recommendations in a decision-support system or actions in an automated process.”

They say explainability is important for regulatory compliance in use cases such as credit approval or hiring, where bias isn’t permitted, and they point out that it is already addressed in the European Union’s General Data Protection Regulation (GDPR) and is emerging in regulations elsewhere. Explainability can be impossible when data is processed inside a black-box system whose internal workings are inaccessible.

On the larger question of setting up a separate ethics-focused AI review board, only 10% said they’ve established one, and 14% are planning to. A major issue for this kind of board would be data bias. “Ultimately,” the writers argue, “assembling similarly diverse data science teams, and ensuring that ethical guidelines are followed, will be essential to any organization if AI is to gain the trust needed to deliver on its promise.” The report ends with a list of commissions and institutions that offer frameworks for AI and data ethics.

The research report is available for download from the SAS Resource Hub or the MIT Sloan Management Review website.