It isn’t much of a stretch to describe a similar “evolution” going on in the development of our artificially intelligent creations that now speak scores of different code dialects to each other and also to us through the pleasant voices of Siri, Alexa, Google, Baxter, and countless commercial chatbots.

Probably the least covered of our machines’ natural language processing (NLP) skills are those that create text. We take for granted the AI and training behind the completion algorithms on our keyboards, which offer a selection of words after just a couple of characters are keyed in.
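To make the keyboard idea concrete, here is a minimal Python sketch of prefix completion. It is only an illustration under simple assumptions, not how any real keyboard works: the sample sentence, the `build_vocabulary` and `suggest` names, and the rank-by-frequency rule are all made up for this example.

```python
from collections import Counter


def build_vocabulary(text):
    """Count how often each lowercase word appears in the sample text."""
    return Counter(text.lower().split())


def suggest(prefix, vocabulary, limit=3):
    """Return up to `limit` words that start with `prefix`, most frequent first."""
    prefix = prefix.lower()
    matches = [
        (word, count)
        for word, count in vocabulary.items()
        if word.startswith(prefix)
    ]
    # Sort by descending count, then alphabetically so ties come out in a fixed order.
    matches.sort(key=lambda pair: (-pair[1], pair[0]))
    return [word for word, _ in matches[:limit]]


# Hypothetical stand-in for the "database as wide as the language."
SAMPLE = "the train left the station then the thief took that train there"
VOCAB = build_vocabulary(SAMPLE)

print(suggest("th", VOCAB))  # prints ['the', 'that', 'then']
```

A real keyboard would also weigh grammar and the words already typed, which this sketch ignores.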

A database as wide as the language and an understanding of grammar and context directed by AI can be quite efficient at speeding up keyboard input. But what about long-form writing, as in a report or white paper? When can we expect our computers to produce “original” multiple-page texts?

SYNTHETIC TEXT FROM GPT-2

Using a far more sophisticated prediction model, the San Francisco-based independent research organization OpenAI has trained “a large-scale, unsupervised language model that can generate paragraphs of text, perform rudimentary reading comprehension, machine translation, question answering, and summarization, all without task-specific training.” The text-generating model is called the Generative Pretrained Transformer version 2 (GPT-2).

Here are two samples of what it can do.

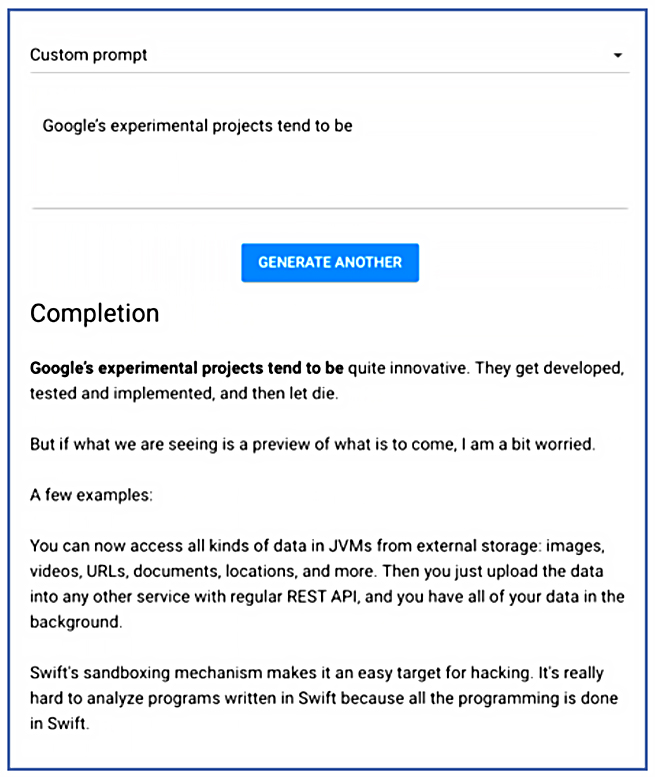

On the Talk to Transformer web page, there’s a GPT-2 text generator that demonstrates how the model works. I pasted the opening words in the first line of the previous SF TECHNOTES about Google mapping the planet, and then I hit the button for GPT-2 to generate its own story about Google. Here’s what I got back.

I left out the word “mapping” in the prompt area, so the model took off on its own in an almost stream-of-consciousness flow, talking about JVMs (Java virtual machines) and the Swift programming language. The creators do warn that the model can stray off topic, and this happens more often in text about technology. That’s why you can go back, edit your prompt, and redirect your synthetic text any number of times.
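For a feel of the prompt-and-continue loop, here is a toy Python sketch. It is emphatically not GPT-2, which is a large neural network. It assumes a far cruder approach: count which word follows which in a tiny made-up corpus, then keep appending the most common follower. The corpus and the `train_bigrams` and `continue_prompt` names are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Map each word to a Counter of the words that follow it in the text."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following


def continue_prompt(prompt, following, max_words=5):
    """Extend the prompt by repeatedly appending the most frequent next word."""
    words = prompt.lower().split()
    if not words:
        return ""
    for _ in range(max_words):
        candidates = following.get(words[-1])
        if not candidates:
            break  # the last word was never seen with a follower
        # Pick the highest count; break ties alphabetically to stay deterministic.
        best = min(candidates.items(), key=lambda pair: (-pair[1], pair[0]))[0]
        words.append(best)
    return " ".join(words)


# Hypothetical training text, invented for this example.
CORPUS = (
    "google maps the planet and google maps the streets "
    "and google indexes the web"
)
MODEL = train_bigrams(CORPUS)

print(continue_prompt("google", MODEL))  # prints: google maps the planet and google
```

Even this toy circles back on itself after a few words, and changing the prompt sends it somewhere else.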

On the openai.com website, examples include factual reporting, fiction, school assignments, and even a test of GPT-2’s reading comprehension skills. Here’s a news report that demonstrates its compositional and database management skills:

SYSTEM PROMPT (HUMAN-WRITTEN)

A train carriage containing controlled nuclear materials was stolen in Cincinnati today. Its whereabouts are unknown.

MODEL COMPLETION (MACHINE-WRITTEN, FIRST TRY)

The incident occurred on the downtown train line, which runs from Covington and Ashland stations.

In an email to Ohio news outlets, the U.S. Department of Energy said it is working with the Federal Railroad Administration to find the thief.

“The theft of this nuclear material will have significant negative consequences on public and environmental health, our workforce and the economy of our nation,” said Tom Hicks, the U.S. Energy Secretary, in a statement. “Our top priority is to secure the theft and ensure it doesn’t happen again.”

The stolen material was taken from the University of Cincinnati’s Research Triangle Park nuclear research site, according to a news release from Department officials.

The Nuclear Regulatory Commission did not immediately release any information.

According to the release, the U.S. Department of Energy’s Office of Nuclear Material Safety and Security is leading that team’s investigation.

“The safety of people, the environment and the nation’s nuclear stockpile is our highest priority,” Hicks said. “We will get to the bottom of this and make no excuses.”

Not bad on the first try. One of the stylistic quirks of GPT-2—redundancy—is present in this sample. Note how the word “release” is repeated in three of the last four paragraphs.

A CAUTIOUS DECISION

In a February 14, 2019, blog post, OpenAI called the release of the model and its code “an experiment in responsible disclosure.” They explained, “Due to our concerns about malicious applications of the technology, we are not releasing the trained model. ... We are instead releasing a much smaller model for researchers to experiment with, as well as a technical paper.”

Their worry was that some people would use GPT-2 to create fake news, spam, and propaganda. And for academics who rely on writing assignments to measure students’ content knowledge and analytical skills, the program could create a new category of campus fraud to compete with essay mills.

Nevertheless, in November 2019, after studying research collected on how the program was being used, OpenAI released a final model of the largest version along with its code.

The decision to open up the text generator to developers and the public came only after due deliberation. It should be noted that OpenAI was co-founded on December 11, 2015, by Elon Musk and Sam Altman. You might recall Musk’s characterization of AI as humanity’s “biggest existential threat.” From the beginning, the organization intended to develop as an altruistic AI venture, fully aware that “it’s hard to fathom how much human-level AI could benefit society, [and, equally] how much it could damage society if built or used incorrectly.”

OpenAI’s studies of the February release of GPT-2 produced four conclusions:

- Humans find GPT-2 text credible. Partners at Cornell University concluded that the credibility score for the text produced measured 6.91 out of a possible 10.

- GPT-2 can be fine-tuned for misuse. The Middlebury Institute of International Studies CTEC (Center on Terrorism, Extremism, and Counterterrorism) showed the program can be tuned to create models that generate synthetic propaganda, “but ML-based detection methods can give experts reasonable suspicion that an actor is generating synthetic text.”

- Detection is challenging. In this regard, the company offers its reason for releasing GPT-2 now: “We are releasing this model to aid the study of research into the detection of synthetic text, although this does let adversaries with access better evade detection.”

- We’ve seen no strong evidence of misuse so far.

On the openai.com blog, there’s a current accounting of benefits and negative impacts. The company points out that with large, general language models we can expect GPT-2 and other similar systems to create: “AI writing assistants; more capable dialogue agents; unsupervised translation between languages; and better speech recognition systems.”

The bloggers also admit, “We can also imagine the application of these models for malicious purposes, including: to generate misleading news articles; impersonate others online; automate the production of abusive or faked content to post on social media; and automate the production of spam/phishing content.”

OpenAI hopes that the release of the GPT-2 generator will provide the public with an awareness of how synthetic text works and a healthy skepticism of text they see appearing online.

TRY IT HERE

If you want to see what GPT-2 can do, you can visit www.talktotransformer.com and try it out. If you don’t like the first result, do it over, as often as you like. Enter the topic for one of the kids’ latest writing assignments and see what you get. (Don’t show them, if they don’t already know about online text generators.)

Want the latest verbose memo from work summarized? Copy the text, enter it, and ask for a summary. Or a translation. Or a story. Or even a poem that you start with a line or two. Your laptop continues to evolve as it moves from copying to actually composing.