Now an AI engineered TTI (text-to-image) application found in programs like DALL-E-2 and Midjourney has become adept enough for the production of AI art and illustration. And it isn’t complicated; you simply type your description (called a prompt) like “Painting, a bowl of roses on a table by the window” and wait 10 seconds. Beginning in January 2021, a number of large companies like Google, OpenAI, and Facebook were creating their versions of text-to-image AI programs for making original art, and the AI art movement has grown rapidly since. The effort now is to make the programs available to all, including those with conventional personal computers.

[caption id="attachment_30632" align="alignnone" width="655"]

Stable Diffusion is a machine-learning model from Stability AI that’s able to generate digital images from natural language descriptions. On August 10, 2022, the London-based company announced the release of their latest models. CEO Emad Mostaque explained, “We are delighted to release the first in a series of benchmark open source Stable Diffusion models that will enable billions to be more creative, happy, and communicative. This model builds on the work of many excellent researchers, and we look forward to the positive effect of this and similar models on society and science in the coming years as they are used by billions worldwide.” If that billions figure for AI art sounds exaggerated, consider the mid-August 2022 announcement from TikTok that it was adding a new effect called AI greenscreen that lets users type in a text prompt to create a background for their video. A text-to-image AI system creates the new background image. James Vincent wrote in a post on theverge.com that “What’s notable about the appearance of TikTok’s AI greenscreen is that it shows just how fast this technology is going mainstream.”

The latest cycle of development for text-to-image AI arguably began in 2021 with the original release of DALL-E by OpenAI. On August 26, 2022, medium.com reported, “A month ago, DALL-E 2 gained one million paid users. Today, Stability AI announced that thanks to the cooperation with the investor, the potential user base [for its Diffusion tool] is 200 million subscribers.” Add the potential millions of Facebook users, and Google, TikTok and more, and billions sounds reachable. Not a surprise then, that PC World has predicted TTI the new killer app for your PC.

Benj Edwards, in a Sept. 6, 2022, arstechnica.com posting titled “With Stable Diffusion, you may never believe what you see online again,” compared the new Stable Diffusion art generator favorably with DALL-E 2 and offered this surprising observation: “Image synthesis arguably brings implications as big as the invention of the camera—or perhaps the creation of visual art itself. Even our sense of history might be at stake, depending on how things shake out. Either way Stable Diffusion is leading a new wave of deep learning creative tools that are poised to revolutionize the creation of visual media.”

HOW TTI WORKS

DALL-E 2 was released by OpenAI in April 2022. The name is a portmanteau of the little Pixar robot WALL-E and Salvatore Dali, the surrealist Spanish painter. To use the program, you’re asked to provide a description called a prompt, and after you type that in, you need only wait 10 seconds for the program to render a drawing, painting, or photorealistic representation of the words. It might seem that the computer just does a word search for images that are then mashed together in the images returned to you, but that isn’t how it works. According to the designers, “Unlike a 3D rendering engine, whose inputs must be specified unambiguously and in complete detail, DALL-E is often able to ‘fill in the blanks’ when the caption implies that the image must contain a certain detail that is not explicitly stated.”

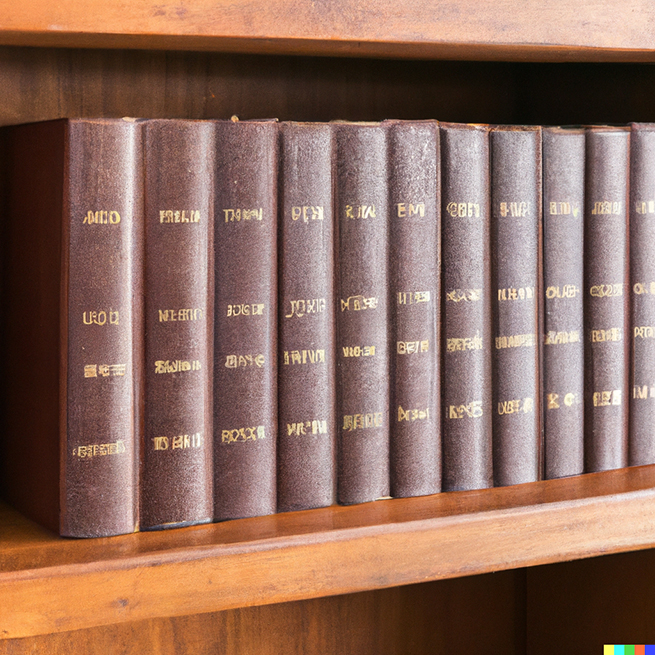

For example, the prompt “Encyclopedia, with writing that is not discernable, on a wooden bookshelf” from Aaron Lucas produced this image on DALL-E.

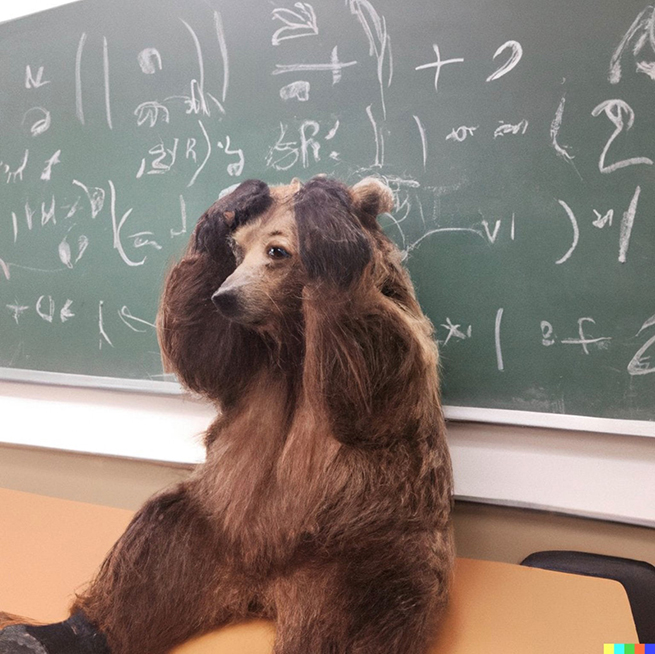

The shelves and the bindings could have been recalled from a large database but look at the writing on the spines. That represents a language that has nowhere existed in our world, so the program invented it to fulfill the prompt’s request for writing that’s “not discernable.” Now consider a prompt that asks for a photorealistic image of a Donatello marble figure holding a Starbucks beverage while reading a graphic novel on a park bench. The search would be endless if it needed to “recall” these images because there are no datasets of Renaissance sculptures in those modern circumstances. But because the system understands who the sculptor Donatello was, what a graphic novel is, and what marks Donatello’s style, it can place all the elements in photorealistic detail, arrange the lighting and background, and have it ready in 10 seconds. It fills in where necessary, and even makes choices about what elements of composition a human would find pleasing. Even prompts as fanciful as “grizzly bear confused in calculus class” have no trouble getting translated to images.

[caption id="attachment_30634" align="alignnone" width="655"]

The DALL-E 2 system uses two AI tools, CLIP (contrastive language-image pre-training) and GPT3 to understand human text. The image is drawn through a process called diffusion, which the company describes as “starting with a bag of dots and then filling in a pattern with greater and greater detail.” These three elements are more easily understood via examples, so here is a 15-minute video review of DALL-E 2 at work DALLE: AI Made this Thumbnail.

PROBLEMS—ETHICAL AND AESTHETIC

If you were allowed to use a TTI AI art generator to create a realistic image of an actual person you might write a prompt putting that person in any number of sordid or illegal situations. To prevent this, the developers coded in filters for propaganda, violent imagery, copyright infringements, deepfakes, and more. There’s a catalog of terms and prompts that aren’t allowed. The newly released Stable Diffusion also has automatic “NSFW” filters and an invisible tracking watermark embedded in the images as well as an ethical use policy that forbids improper uses. But some have observed that the open-source code for the program can be bypassed by someone downloading the open-source code and changing it. These problems remain unsolved and need to be addressed with locked-down solutions.

[caption id="attachment_30636" align="alignnone" width="655"]

Another interesting problem revolves around the ancient question, what is art? This year’s annual art competition at the Colorado State Fair had a controversial winner. Jason M. Allen, owner of the Incarnate Games studio, submitted an AI-generated painting he titled Théâtre D’opéra Spatial (Space Opera Theater), and it won first place in the digital category. Allen used the Midjourney AI generator to make the work, and it was one of the first AI-generated pieces to win this kind of award. After the blue ribbon was hung alongside the canvas, some of the contestants and then a wider group of critics complained that a TTI program produced the work. The controversy reached its peak when the story appeared in The New York Times, Smithsonian magazine, and NPR, and when it lit a blazing colloquy on Twitter with pronouncements including, “We’re watching the death of artistry unfold right before our eyes.”

When he first submitted the work, Allen did tell the committee that he used Midjourney, and there were no objections because the rules for the digital category allow any “artistic practice that uses digital technology as part of the creative or presentation process.” When the first complaints were made, Allen did explain that he had made his own adjustments to the painting, going over 900 iterations of the image, which took him more than 80 hours.

The potential duplicity of TTI AI imagery along with the yet-to-be-defined copyright issues need to be settled soon because this killer app is picking up speed and offering new options for interested users. The question—Is it art if it can be this easy and skills-free?—doesn’t have the same urgent deadline. Don’t expect that debate to be over soon.