That’s what a dictionary would have provided as a definition before founders Sergey Brin and Larry Page tinkered with the spelling in 1998. Now, when you look up “google,” it’s no longer a noun, and the entry reads: “transitive verb: to use the Google search engine to obtain information about (someone or something) on the World Wide Web” (Merriam-Webster). The word is reminiscent of the single-word mantra Think that appeared over doorways and everywhere throughout the early IBM headquarters. If you visit the company’s home page for the tools Google has developed, you’ll find many different iterations of the search dynamic. On the about.google page, there are 81 tools that range widely over Google’s research interests. Among the familiar Search, Maps, Docs, and YouTube, there are applications like Scholar, which “provides a simple way to broadly search for scholarly literature”; Input Tools, which lets you “switch among over 80 languages and input methods [as seamlessly as typing]”; and Tilt Brush, which lets you paint in 3D space with dimensional brush strokes on PlayStation VR, Oculus, or Windows Mixed Reality.

Google Lens, a standout on the list, appeared fairly recently. Although it can handle almost any kind of lookup, this application doesn’t seem to have attracted the attention it deserves.

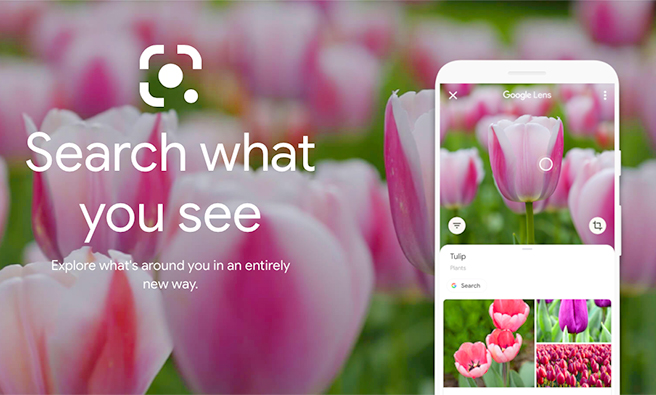

SEARCH WHAT YOU SEE

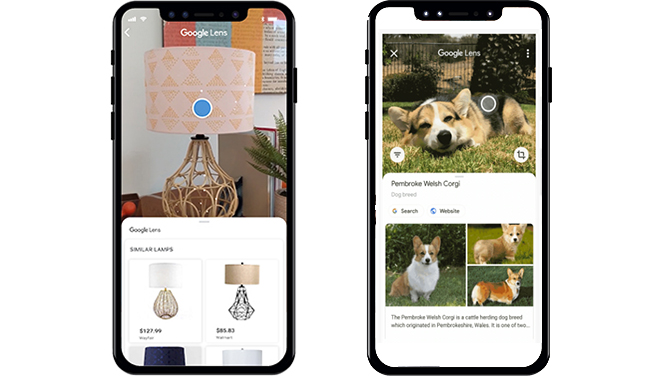

Google Lens is a little more than three years old, and the dramatic expansion of its database has rendered it amazingly precocious. Working from your phone’s camera or from existing images, Lens attempts to recognize what it sees, provides relevant information about the object, and offers actions that you might want to take based on that information. If it sees a product, it might show where the product can be purchased. Shopping results are available in 36 countries. Lens can also translate text from most of the languages in Google Translate.

It can also tell you what that plant is and whether local nurseries have it, what breed of dog that is, whether that snake is venomous, who sculpted that museum piece, and even what kind of squash that is and what recipes you might try with it. It can read QR codes and labels. Point it at the Wi-Fi name and password in the receptionist’s window at the dentist, and it will connect your phone to the office’s Wi-Fi.

On the Lens home page (lens.google), there’s a short list of some of the more popular functions, including what it does when you show it a menu. “Wondering what to order at a restaurant? Look up dishes and see what’s popular, right on the menu, with photos and reviews from Google Maps.” And there’s the homework help. “Stuck on a problem? Quickly find explainers, videos, and results from the web for math, history, chemistry, biology, physics, and more.” Just open the Lens application, and point your phone at the equation, the historical term, or the image of a cell dividing or a neuron firing.

And even when Lens can’t find a specific reference for the image you’re searching, it can offer suggestions for similar objects that you might find interesting or useful.

At the bottom of the Lens home page are links for downloading the Google Lens app to your Android phone or Apple iPhone, or for using Lens as part of the Google Photos app.

TEACHING A CAMERA TO READ

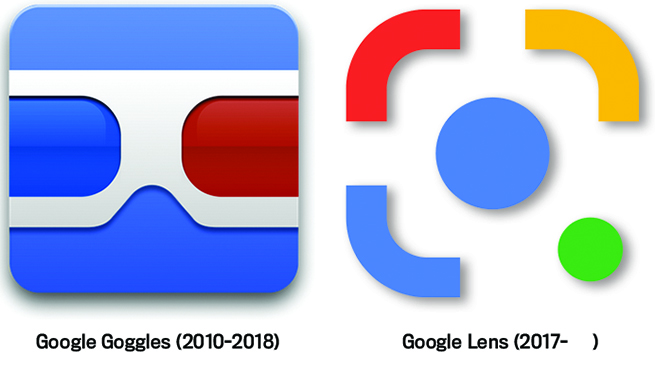

Before Google Lens, there was Google Goggles, an early attempt at an image recognition app for mobile devices running Google’s Android operating system. Launched in October 2010, it was available until August 2018. If you photographed a historical landmark, Goggles would provide information about the structure. It could recognize books and CDs, add information scanned from a business card to your contacts, translate foreign text, and even solve Sudoku puzzles. A YouTube preview of Goggles’ capabilities is still online.

The more recent version of the application is called Lens, and it has the advantages of advanced neural networks, AI, machine learning, and a database that has burgeoned. For Google, the machine learning is centered in its open-source TensorFlow framework, and the data comes from the tens of billions of facts, still growing, accumulated in Google’s Knowledge Graph. For text and translation, Lens reads what it sees, then provides information along with ways to act on it. OCR (optical character recognition) is the component that gives the system its reading skills.

The horizon for Google Lens can only widen as hardware, software, and networks get smarter. The significance of using a visual approach to search was explained by Google Vice President Aparna Chennapragada in a blog post titled “The era of the camera: Google Lens, one year in” (December 19, 2018). At the beginning of the post, she reminds us, “We are visual beings—by some estimates, 30 percent of the neurons in the cortex of our brain are for vision. Every waking moment, we rely on our vision to make sense of our surroundings, remember all sorts of information, and explore the world around us.”

Chennapragada’s expectations for Google Lens certainly seem reasonable. In the blog, she writes, “As computers start to see like we do, the camera will become a powerful and intuitive interface to the world around us; an AI viewfinder that puts the answers right where the questions are—overlaying directions right on the streets we’re walking down, highlighting the products we’re looking for on store shelves, or instantly translating any word in front of us in a foreign city. We’ll be able to pay our bills, feed our parking meters, and learn more about virtually anything around us, simply by pointing the camera.”

If you aren’t already a user of Lens, take a look. It makes formal searches much easier and often more interesting.