The images on screen actually shift as you move to get a better look at what you’re trying to see. Sony’s new ELF-SR1 monitor requires no glasses or other viewing apparatus. Instead, a small camera tracks your eyes, and the display sends a separate image to each one.

What’s perhaps even more interesting than this unique technology from Sony is the long, winding path 3-D technology has taken to get where it is today.

A LONG ROAD

Some technologies are just very slow to develop. Speech recognition, for example, demanded great patience from users and developers over the 50 years between a DARPA-funded effort in 1971 to get a computer to master a 1,000-word vocabulary and today’s glib digital assistants such as Siri and Alexa, not to mention IBM’s Jeopardy champion Watson.

Yet few tech laggards can compare with 3-D. Its growth curve has at times been almost imperceptible. The story stretches back to 1849 and David Brewster’s stereoscope. It continues with the first 3-D theatrical film, shown in Los Angeles in 1922, then a long, flat-lined period of abandonment. Next came a “golden age” in the early 1950s that brought many movies and the first 3-D comic book (Mighty Mouse, 1953). A second abandonment followed, then a rebirth for film (1985-2003), and finally a general but scattered resurgence in entertainment, computer and medical graphics, and more.

That’s a developmental trek of 171 years, with few history-changing moments to propel it. Nothing as definitive as the shift from monochrome to color imaging ever came along to transform efforts to recreate the way we perceive depth in everyday life.

RELATIVELY SIMPLE SCIENCE

The technical problem at the heart of 3-D was solved on paper with the original 3-D photographs during the rule of Queen Victoria in England. The question was how to create an image that simulates the way we see objects in the real world. The answer was to replicate what our eyes do. Take two photos of a subject from slightly different angles, then build a device that shows one image to each eye. The primary visual cortex at the back of the brain combines them, and the result is a binocular image that appears to have depth.
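
The two-photo trick can be illustrated with simple geometry. The sketch below is a minimal illustration, not how any particular camera or display works. It models the two photos as ideal pinhole cameras placed side by side. The 65 mm separation (roughly the spacing of human eyes), the 50 mm focal length, and the function names are all assumptions made for this example. The same point lands in a slightly different spot in each image, and that horizontal offset, or disparity, shrinks as the object gets farther away.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Point3D:
    x: float  # metres, positive to the right
    y: float  # metres, positive upward
    z: float  # metres in front of the cameras; must be > 0


def project(point: Point3D, camera_x: float, focal_length: float) -> tuple[float, float]:
    """Ideal pinhole projection for a camera at (camera_x, 0, 0) looking along +z."""
    if point.z <= 0:
        raise ValueError("point must lie in front of the camera")
    u = focal_length * (point.x - camera_x) / point.z
    v = focal_length * point.y / point.z
    return u, v


def stereo_pair(point: Point3D, baseline: float, focal_length: float):
    """Image coordinates of one point as seen by a left and a right camera `baseline` apart."""
    half = baseline / 2
    left = project(point, -half, focal_length)
    right = project(point, half, focal_length)
    return left, right


def disparity(point: Point3D, baseline: float, focal_length: float) -> float:
    """Horizontal offset between the two images; works out to focal_length * baseline / z."""
    left, right = stereo_pair(point, baseline, focal_length)
    return left[0] - right[0]


if __name__ == "__main__":
    # Assumed values: 65 mm baseline and a 50 mm lens, both in metres.
    for z in (1.0, 10.0):
        d_mm = disparity(Point3D(0.0, 0.0, z), baseline=0.065, focal_length=0.05) * 1000
        print(f"object at {z:>4} m -> disparity {d_mm:.3f} mm on the image plane")
```

With these assumed numbers, moving the object from 1 m to 10 m cuts the disparity tenfold, from 3.25 mm to 0.325 mm on the image plane. The viewer’s brain then has to turn that offset back into depth.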

The mechanics of the human process are only partially understood. Each retina receives an image of the same scene, but from viewpoints 54-74 mm apart, which is the typical range of distances between people’s pupils. What the neurons at the back of your head do with these two pictures isn’t entirely clear. The resulting illusion, if it is an illusion, is what lets us judge the flight of a baseball well enough to thrust a hand into the space it’s heading toward and catch it.

It’s what allows us to drive a car safely and what impresses us with the vastness of the woods we hike through. Stereoscopic depth perception isn’t very effective beyond about 18 feet, though. Past that distance, we rely more on motion, contrast, and other monocular cues to sense how far apart things are.
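
Some simple arithmetic suggests one plausible reason stereo depth fades with distance. The sketch below computes the binocular disparity between two points directly ahead of the viewer that differ in depth by 10 cm, at several viewing distances. It assumes a 63 mm interpupillary distance, which falls inside the range quoted above. The function names are ours. The disparity falls off roughly with the square of the distance. At 3 feet a 10 cm step produces well over 1,000 arcseconds of disparity, but at 18 feet it produces only about 42.

```python
import math

FEET_TO_METRES = 0.3048


def vergence_angle(distance_m: float, ipd_m: float) -> float:
    """Angle (radians) between the two lines of sight when both eyes fixate a point straight ahead."""
    return 2 * math.atan(ipd_m / (2 * distance_m))


def relative_disparity_arcsec(distance_m: float, depth_step_m: float, ipd_m: float = 0.063) -> float:
    """Binocular disparity (arcseconds) between a point and another point depth_step_m farther away."""
    delta = vergence_angle(distance_m, ipd_m) - vergence_angle(distance_m + depth_step_m, ipd_m)
    return math.degrees(delta) * 3600


if __name__ == "__main__":
    # 63 mm is an assumed eye spacing within the 54-74 mm range given in the article.
    for feet in (3, 6, 18, 60):
        arcsec = relative_disparity_arcsec(feet * FEET_TO_METRES, depth_step_m=0.10)
        print(f"{feet:>3} ft: {arcsec:8.1f} arcsec for a 10 cm depth difference")
```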

The Sony Spatial Reality Display doesn’t get bogged down in this processing enigma. Instead, its camera tracks both of your eyes continuously, and the display delivers a stream of paired images, one for each eye. The viewing zone lets you move 25 degrees to the left or right, 20 degrees up, and 40 degrees down. As you do, you see the tops and sides of the objects on screen.
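
Sony doesn’t publish its tracking loop, but the general idea can be sketched. Take the tracked head position, check that it falls inside the viewing zone described above, and derive one viewpoint per eye to render from. Everything below is a hypothetical simplification. Coordinates are measured from the centre of the screen. The head is assumed to be level and facing the screen. The 63 mm eye spacing is an assumed default, and the screen is treated as upright, although the real device may measure its angles differently.

```python
from __future__ import annotations

import math
from dataclasses import dataclass

# Viewing-zone limits as given in the article (degrees); how they map onto the
# real device's geometry is an assumption in this sketch.
MAX_LEFT_RIGHT_DEG = 25.0
MAX_UP_DEG = 20.0
MAX_DOWN_DEG = 40.0


@dataclass
class Vec3:
    x: float  # metres to the right of screen centre
    y: float  # metres above screen centre
    z: float  # metres in front of the screen


def in_viewing_zone(head: Vec3) -> bool:
    """True if the tracked head lies inside the assumed angular viewing zone."""
    if head.z <= 0:
        return False
    horizontal = math.degrees(math.atan2(head.x, head.z))
    vertical = math.degrees(math.atan2(head.y, head.z))
    return abs(horizontal) <= MAX_LEFT_RIGHT_DEG and -MAX_DOWN_DEG <= vertical <= MAX_UP_DEG


def eye_positions(head: Vec3, ipd_m: float = 0.063) -> tuple[Vec3, Vec3]:
    """Left and right eye positions, assuming a level head facing the screen."""
    half = ipd_m / 2
    return Vec3(head.x - half, head.y, head.z), Vec3(head.x + half, head.y, head.z)


def views_to_render(head: Vec3):
    """Hypothetical per-frame step: two virtual camera positions, or None outside the zone."""
    if not in_viewing_zone(head):
        return None
    return eye_positions(head)
```

In a real renderer, each returned position would drive a separate virtual camera, much like the two photos of a Victorian stereograph. The difference is that the cameras move every frame to follow the viewer.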

The monitor follows and sends a different image to each eye. All images courtesy Sony

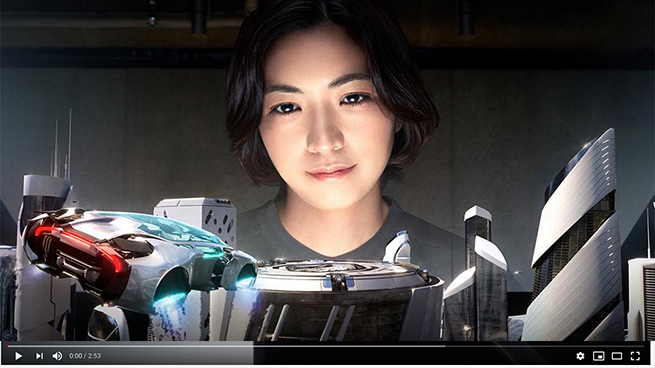

Here’s how Sony describes the process: “Content extends deep within the display from any viewing angle. Simply moving around—up or down, side to side—makes you feel like you’re interacting with the content right in front of you. The micro optical lens is positioned precisely over the stunning 15.6-inches (diagonal) LCD display. This lens divides the image into the left and right eyes allowing for stereoscopic viewing with just the naked eye.” Examples of what that looks like can be seen in a YouTube video of the display.

APPLICATIONS

Potential uses for the ELF-SR1 include engineering, filmmaking, and graphic and game design. For any manufactured product, it can help both at the design stage and in presentations of the finished product.

Frantisek Zapletal of the Virtual Engineering Lab for Volkswagen Group of America described his company’s plans for the monitors to Loz Blain of newatlas.com: “At Volkswagen, we’ve been evaluating Sony’s Spatial Reality Display from its early stages, and we see considerable usefulness and multiple applications throughout the ideation and design process, and even with training.”

THE KILLER APP?

Although Sony’s 3-D monitor might not yet be ready for home use, unusual 3-D applications are showing up in other everyday environments. Some luxury car manufacturers, including the makers of the Genesis GV80 SUV, have recently committed to adding a glasses-free (autostereoscopic) 3-D dashboard display from Continental.

Continental’s first 3-D dash display. Image courtesy Continental

Working from its site in Babenhausen, Germany, Continental has built three-dimensional scales, pointers, and objects into its dashboard display, including a stop-sign warning that appears in the driver’s line of sight. The technology is based on parallax barriers: slanted slats that split the image into two slightly offset views, one for each eye, to create stereoscopic 3-D. An interior camera follows the driver’s line of sight and adjusts the 3-D views to the precise position of the driver’s head.
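
A two-view parallax barrier works because each eye sees only every other column of pixels through the gaps between the slats. The minimal sketch below shows how such a frame might be prepared. It rests on several simplifying assumptions: vertical columns rather than Continental’s slanted slats, whole pixels rather than red, green, and blue subpixels, and a `phase` value supplied by a hypothetical head tracker. The left- and right-eye images are interleaved column by column, and flipping the phase swaps which columns each eye receives.

```python
def interleave_columns(left, right, phase=0):
    """Combine left- and right-eye images into one frame for a two-view parallax barrier.

    left, right: images of equal size, given as lists of rows of pixel values.
    phase: 0 sends even columns to the left eye, 1 sends them to the right eye.
    A head tracker (hypothetical here) would flip the phase when the viewer's
    sideways movement means each eye now sees the other set of columns.
    """
    if len(left) != len(right) or any(len(a) != len(b) for a, b in zip(left, right)):
        raise ValueError("left and right views must have the same dimensions")
    if phase not in (0, 1):
        raise ValueError("phase must be 0 or 1")
    frame = []
    for row_l, row_r in zip(left, right):
        # Alternate sources column by column; each eye keeps every other column.
        frame.append([row_l[c] if (c + phase) % 2 == 0 else row_r[c] for c in range(len(row_l))])
    return frame


if __name__ == "__main__":
    left = [["L"] * 6 for _ in range(2)]
    right = [["R"] * 6 for _ in range(2)]
    print(interleave_columns(left, right, phase=0)[0])  # ['L', 'R', 'L', 'R', 'L', 'R']
    print(interleave_columns(left, right, phase=1)[0])  # ['R', 'L', 'R', 'L', 'R', 'L']
```

The code also makes the usual trade-off of this approach visible: each eye gets only half of the panel’s horizontal resolution.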

Frank Rabe, the head of the Human Machine Interface business unit at Continental, has said, “With our volume-production display featuring autostereoscopic 3D technology, we are raising human-machine interaction to a whole new level and laying the foundations for intuitive communication in the connected cockpit of tomorrow.”

To keep the display from becoming a dangerous distraction, a monitoring system alerts the driver when attention lingers on it too long. Ironically, that problem might not exist had 3-D developed more quickly and had its applications become more diverse and common. After all, a driver with such a display already sees everything else in front of him in 3-D: the steering wheel, turn-signal levers, visors, the phone in the cup holder. That’s how we see every nearby object. But 3-D displays are still unusual enough to be fascinating.

Is the dashboard display a killer app for 3-D? Not likely, but the Sony monitor might be closer to something that could propel the technology. Perhaps we should ask for a prediction from Siri.