SPEAKER SMARTS

You can look over the technical specs for these DPAs, but you won’t find a category for native IQ, at least not yet. It would make sense to have a comparative measure of intelligence, as we do with lumens for light fixtures and clock speeds in GHz for processors, but no such smarts quotient has been defined. Fortunately, Eric Enge, a search engine optimization expert and the founder of Stone Temple Consulting, worked out his own standardized test that he administers periodically to the voices in the box. This is the second year that Enge has published the results of his testing at the end of April. Five devices were tested: Alexa (Amazon), Cortana (Microsoft), Google Assistant (on both the Google Home speaker and a smartphone), and Siri (Apple). Google got two scorings in the report because the software performs differently on home speakers and smartphones.

Enge’s 2018 test consisted of an exhaustive list of 4,942 questions. If that doesn’t seem to qualify as exhaustive, compare it to the SAT exams administered each year to high school students. The kids put in a grueling four-hour Saturday morning answering 154 questions and writing an essay.

QUERIES NOT TASKS

Enge defines DPAs in a way that establishes the parameters of the testing. “A digital personal assistant is a software-based service that resides in the cloud, designed to help end users complete tasks online. These tasks include answering questions, managing their schedules, home control, playing music, and much more.”

From there, he looked for an answer to the question, “What do DPAs do most?” His report cites a 2017 comScore survey of U.S. households, which found these top five uses:

- General questions (60%)

- Weather (57%)

- Stream music (54%)

- Timers/alarms (41%)

- Reminders/to-do (39%)

The remaining 10 followed in this order: calendar, home automation, stream news, find local businesses, play games, Bluetooth audio, audiobooks, order products, order food/services, and podcasts.

To determine the smartest, Enge concentrated the questioning on “general questions,” not actions taken by the services. The group took special notice of the following five qualities of response:

- Did the assistant answer verbally?

- Was the answer from a database (like Knowledge Graph)?

- Was the answer from a third-party source (“According to Wikipedia. . .”)?

- How frequently did the assistant fail to understand the question?

- Did the device answer with an incorrect response?

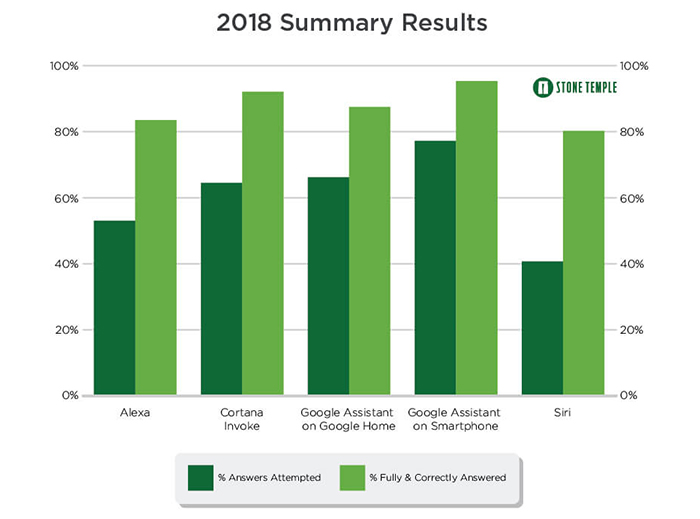

And after nearly 5,000 queries for each of the five DPAs were processed, the results looked like this:

Click to enlarge. Stone Temple Consulting (www.stonetemple.com/digital-personal-assistants-study/)

The bar representing % Answers Attempted “means that the personal assistant thinks that it understands the question, and makes an effort to provide a response.” Not included in this category are the “I’m still learning” or “Sorry, I don’t know that” replies. Also not qualifying for answers attempted are those instances when an answer is given but the query was heard incorrectly. Those responses Enge chalks up to limitations in language skills, not knowledge.

An important qualifier for the correct answer is the word “fully.” The answer has to be direct and complete. Enge’s example of something close but not good enough is this: “If the personal assistant was asked how old is Abraham Lincoln, but answered with his birth date, that would not be considered fully & correctly answered.”
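The two measures described above can be sketched in a few lines of Python. This is only an illustration, not Enge's actual scoring code: the `Outcome` labels and the `score` function are hypothetical names, the sample tallies are made up, and the sketch assumes the "fully & correctly answered" percentage is taken over attempted answers rather than over all questions, which the passage above doesn't state explicitly.

```python
from collections import Counter
from enum import Enum


class Outcome(Enum):
    """Hypothetical labels for how an assistant handled one query."""
    FULLY_CORRECT = "fully_correct"          # direct, complete, and right
    NOT_FULLY_CORRECT = "not_fully_correct"  # attempted, but wrong or incomplete
    DECLINED = "declined"                    # "Sorry, I don't know that"
    MISHEARD = "misheard"                    # answered a question nobody asked


# Only these outcomes count as an attempted answer; declined and
# misheard queries are excluded, as described in the text.
ATTEMPTED = {Outcome.FULLY_CORRECT, Outcome.NOT_FULLY_CORRECT}


def score(outcomes):
    """Return (% answers attempted, % fully & correctly answered).

    Assumption: the second figure is a share of attempted answers.
    """
    tally = Counter(outcomes)
    total = sum(tally.values())
    attempted = sum(tally[o] for o in ATTEMPTED)
    pct_attempted = 100.0 * attempted / total if total else 0.0
    pct_correct = (
        100.0 * tally[Outcome.FULLY_CORRECT] / attempted if attempted else 0.0
    )
    return pct_attempted, pct_correct


# Made-up sample of ten queries, purely for illustration.
sample = (
    [Outcome.FULLY_CORRECT] * 6
    + [Outcome.NOT_FULLY_CORRECT] * 2
    + [Outcome.DECLINED]
    + [Outcome.MISHEARD]
)
print(score(sample))  # (80.0, 75.0)
```

Under this scheme, the Lincoln example (a birth date given in reply to a question about age) would be tallied as `NOT_FULLY_CORRECT`: it counts as an attempt but not as a full and correct answer.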

The four top-level conclusions Enge and his partners arrived at this year are:

- Google Assistant still answers more questions than the others and has the highest percentage of correct responses.

- Cortana finished second in both the percentage of questions answered and the percentage answered correctly.

- Alexa has made the biggest gains over last year’s results, answering 2.7 times as many questions as in 2017.

- All of the competitors have made important progress in “closing the gap with Google.”

SCORE PROFILES

Enge includes in his report four other interesting tables profiling the five contestants’ scores. The first is a year-over-year (2017-2018) comparison of the Percentage of Fully & Correctly Answered. Since last year, only Cortana Invoke increased its accuracy (from 86.0% to 92.1%). Alexa shows a slight decrease, but considered alongside its 2.7X increase in attempted responses, that’s more than acceptable.
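A quick bit of arithmetic shows why a small dip in accuracy can be outweighed by a big jump in attempts. The figures below are hypothetical round numbers chosen only to illustrate the trade-off; they are not Alexa's actual results, and `correct_count` is a made-up helper that assumes accuracy is measured over attempted answers.

```python
def correct_count(total_questions, pct_attempted, pct_correct):
    """Estimate how many questions were fully and correctly answered.

    Assumption: pct_correct is a share of attempted answers.
    """
    attempted = total_questions * pct_attempted / 100
    return attempted * pct_correct / 100


# Hypothetical figures: attempts grow 2.7X (20% -> 54%) while
# accuracy slips a little (90% -> 85%).
before = correct_count(5000, 20, 90)
after = correct_count(5000, 54, 85)

print(before, after)             # 900.0 2295.0
print(round(after / before, 2))  # 2.55
```

In this made-up case, the assistant still delivers about two and a half times as many correct answers as before, despite the lower accuracy rate.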

Three other tables cover the number of incorrect responses, the percentage of responses that featured snippets, and the number that are jokes. You can look at these along with numerous specific examples of responses on the report at www.stonetemple.com/digital-personal-assistants-study/.

OTHER APPS AND SERVICES

The report concludes with a reminder that one important area isn’t covered by the IQ testing: the overall connectivity of the devices with other apps and services, such as thermostats, home lighting, security systems, and locks. Enge suggests, “You can expect all four companies to be pressing hard to connect to as many quality apps and service providers as possible, as this will have a major bearing on how effective they all are.”

As a closing footnote on that issue, Google announced last week that its Assistant DPA can now connect to and control more than 5,000 smart devices from 1,500 manufacturers. A Google spokesman said that Amazon’s Alexa is able to control 4,000 devices from 1,200 manufacturers, so for now, the Google Assistant has passed Alexa in the smart-home device competition. The announcement was made ahead of this week’s Google I/O, the company’s annual developer conference in Mountain View, Calif.