No surprise then that this year’s list offers two significant AI entries: generative adversarial networks (GANs), which pit AI systems against each other, and cloud-based AI, which makes deep-learning algorithms available to many more users.

Here are seven of this year’s inventions and projects that the Technology Review editorial staff thinks will have a profound effect on our lives.

3-D METAL PRINTING

The 3-D printer has become more affordable, more precise, and easier to use since its invention in 1984. It was supposed to launch a manufacturing revolution, but what has been missing is the ability to make objects in more durable materials such as metals.

In 2017, that began to change as researchers at the Lawrence Livermore National Laboratory created a method of 3-D printing that they say can produce stainless-steel parts twice as strong as those traditionally manufactured. Massachusetts start-up Markforged launched the first 3-D metal printer that sells for less than $100,000, and another Boston-based company, Desktop Metal, “began to ship its first metal prototyping machines in December 2017. It plans to begin selling larger machines, designed for manufacturing, that are 100 times faster than older metal printing methods.”

And it isn’t just the startups. GE has been using 3-D printing for some of its aviation products. This year, the company plans to begin sales of a metal printer that’s “fast enough to make large parts.”

MACHINE LEARNING TOOLS IN THE CLOUD

What better way to spread the AI revolution than to enlist the giants and their delivery systems? Amazon, Google, and Microsoft have all decided to open the gates not just to the tech industry but to everyone who needs the tools and the consulting services to get started.

Amazon leads in cloud AI with its AWS (Amazon Web Services) division, while Google has TensorFlow, an open-source library of AI resources for building machine-learning software, and its recently announced Cloud AutoML, which Technology Review describes as “a suite of pre-trained systems that could make AI simpler to use.” Microsoft has Azure, an AI-enabled cloud platform, and it has teamed up with Amazon to offer Gluon, “an open-source, deep-learning library that is supposed to make building neural nets—a key technology in AI that crudely mimics how the human brain learns—as easy as building a smartphone app.”

DUELING NEURAL NETWORKS

Computers handle math and logic very well, but recognizing objects, whether by sight or by sound, has been slower to develop. AI is getting better at identifying objects, but it still stumbles when asked to generate images on its own. That requires imagination.

Ian Goodfellow, then a PhD student at the University of Montreal, came up with one solution, which has come to be known by the acronym GAN (generative adversarial network).

The strategy is simple. Take two neural networks, train them on the same data set, and then have them compete head-to-head, or rather, AI to AI. One network, called the generator, creates variations on pictures it has seen. It might generate, for instance, an image of a dog with wings. The second network, called the discriminator, is asked to identify whether that image is like the ones it has seen or is a fake created by the generator.

Given enough practice, the generator can become adept at creating images that the discriminator can’t identify as fakes. In effect, the generator has learned to recognize, and then create, images realistic enough to stump the discriminator.
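To make that back-and-forth concrete, here is a minimal sketch in Python. It rests on loud assumptions: the “images” are single numbers clustered around 4.0, each network is shrunk to two parameters, and every name (such as `train_toy_gan`) is invented for illustration rather than taken from Goodfellow’s work or any library. In this toy, the generator never sees the real samples directly and learns only from the discriminator’s feedback.

```python
import math
import random


def sigmoid(t):
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def train_toy_gan(steps=2000, lr=0.01, seed=0):
    """Alternate discriminator and generator updates on 1-D toy data."""
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator: fake = a * noise + b
    w, c = 0.0, 0.0  # discriminator: P(real) = sigmoid(w * x + c)
    for _ in range(steps):
        real = rng.gauss(4.0, 0.5)   # assumed "real" data (stand-in for a photo)
        noise = rng.gauss(0.0, 1.0)
        fake = a * noise + b         # the generator's attempt

        # Discriminator step: raise P(real) on the real sample, lower it on the fake.
        p_real = sigmoid(w * real + c)
        p_fake = sigmoid(w * fake + c)
        w += lr * ((1.0 - p_real) * real - p_fake * fake)
        c += lr * ((1.0 - p_real) - p_fake)

        # Generator step: adjust a and b so the discriminator rates the fake as more real.
        p_fake = sigmoid(w * fake + c)
        grad_fake = (1.0 - p_fake) * w  # derivative of log P(real | fake) w.r.t. fake
        a += lr * grad_fake * noise
        b += lr * grad_fake
    return (a, b), (w, c)


if __name__ == "__main__":
    generator, discriminator = train_toy_gan()
    print("generator (a, b):", generator)
    print("discriminator (w, c):", discriminator)
```

Toy setups like this one can oscillate instead of settling down, so it is best read as an illustration of the alternating updates, not as evidence of how well real GANs train.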

The editors report, “GANs have been put to use creating realistic-sounding speech and photorealistic fake imagery. In one compelling example, researchers from chipmaker Nvidia primed a GAN with celebrity photographs to create hundreds of credible faces of people who don’t exist.” They can also reshape images to realistically “make sunny roads appear snowy, or turn horses into zebras.”

As a step forward in general machine intelligence, this means “AI may gain, along with a sense of imagination, a more independent ability to make sense of what it sees in the world.”

BABEL-FISH EARBUDS

The $159 Google earbuds called Pixel Buds connect with Pixel smartphones and the Google Translate app to enable almost real-time translation of a number of languages.

It works like this. You put in the earbuds and speak to your friend in, say, English. Your friend has their phone open to the Translate app, which translates what you are saying and plays it aloud on the phone in your friend’s native language, perhaps Chinese. Your friend then responds aloud in Chinese, and you hear the translation of their response in your earbuds. To control the pacing, you tap and hold the right earbud while you are talking so you both can maintain eye contact throughout the conversation.

The two companies currently ironing out the wrinkles in this kind of system are Google and Baidu.

ZERO-CARBON NATURAL GAS

Natural gas accounts for more than 30% of U.S. electricity generation and 22% of the world’s, and even though it’s cleaner than coal, at these levels it still produces substantial carbon emissions.

The ideal solution to the problem would be a system that could produce carbon-free energy from fossil fuel at a cost that isn’t prohibitive.

A pilot power plant outside of Houston is working toward that ideal and hopes to bring its solution online in three to five years. The project is Net Power, and it’s a collaboration between 8 Rivers Capital, Exelon Generation, and CB&I, an energy construction firm.

The basic principle at work involves capturing the carbon dioxide released from burning natural gas, pressurizing and heating it, and then using the supercritical CO2 as a “working fluid” to drive a specially built turbine. The CO2 is recycled over and over, and whatever remains can be captured economically rather than released.

This project is described by the editors as “one of the furthest along to promise more than a marginal advance in cutting carbon emissions.”

PERFECT ONLINE PRIVACY

Cryptocurrency organization Zcash Company, along with JPMorgan Chase and ING, has been working on a new blockchain protocol that uses zero-knowledge proofs, which can guarantee the privacy of clients in Zcash cryptocurrency transactions and in the banks’ payment systems. Normally, with blockchain systems like the one used by Bitcoin, transactions are public and visible to all users.

Zcash’s zero-knowledge system lets users transact anonymously. The zk-SNARK protocol developed by Zcash uses cryptography to guarantee the validity of the transactions while hiding the identity of the sender, the recipient, and other transactional details. The name zk-SNARK stands for “zero-knowledge succinct non-interactive argument of knowledge.”
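A real zk-SNARK is far too involved to show here, but the core idea of proving you know something without revealing it can be sketched with a much older and simpler technique. The Python below is a Schnorr-style proof of knowledge made non-interactive with a hash (the Fiat-Shamir approach); it is not Zcash’s protocol. The tiny group parameters and the function names `prove`, `verify`, and `challenge` are assumptions chosen for readability, and numbers this small offer no real security.

```python
import hashlib
import secrets

# Toy group (assumed for illustration): P = 2*Q + 1 with P and Q prime,
# and G = 4 generates the subgroup of order Q. Far too small to be secure.
P, Q, G = 1019, 509, 4


def challenge(public_key, commitment):
    """Derive the challenge from a hash instead of a live verifier."""
    data = f"{G}|{public_key}|{commitment}".encode()
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % Q


def prove(secret):
    """Prove knowledge of `secret`, where public_key = G**secret mod P."""
    public_key = pow(G, secret, P)
    nonce = secrets.randbelow(Q)  # fresh randomness masks the secret
    commitment = pow(G, nonce, P)
    response = (nonce + challenge(public_key, commitment) * secret) % Q
    return public_key, (commitment, response)


def verify(public_key, proof):
    """Check the proof using public values only; the secret is never seen."""
    commitment, response = proof
    c = challenge(public_key, commitment)
    return pow(G, response, P) == (commitment * pow(public_key, c, P)) % P


if __name__ == "__main__":
    public_key, proof = prove(secret=123)
    print("proof accepted:", verify(public_key, proof))
```

The verifier ends up convinced that the prover knows the secret number behind the public key, yet the proof itself contains only a random-looking commitment and response.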

The Zcash Company was founded in 2016 by a team that included the cryptographer Matthew D. Green from Johns Hopkins University. Zero-knowledge cryptography itself is much older, first appearing in an academic paper in 1985. The current interest in cryptocurrencies has renewed enthusiasm for it, and in 2017 JPMorgan Chase added zk-SNARKs to its blockchain-based payment system.

Currently, the protocol is slow, it requires substantial processing, and it isn’t yet widely deployed. The editors, however, cite Vitalik Buterin, founder of the Ethereum cryptocurrency, who has described zk-SNARKs as an “absolutely game-changing technology.”

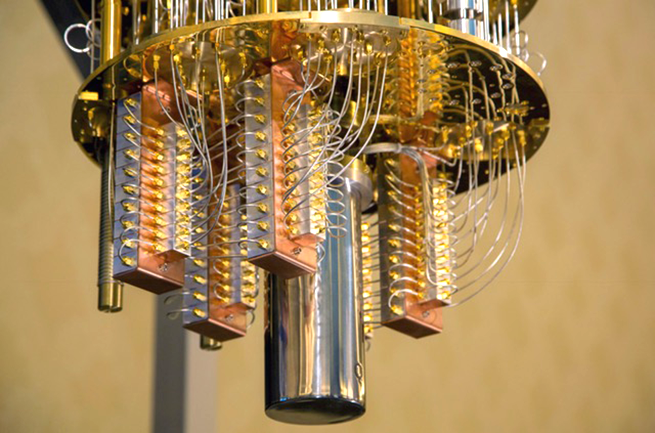

MATERIALS’ QUANTUM LEAP

The most powerful computers ever conceived have now appeared on the distant horizon. They’re predicted to become fully functional within the next five to 10 years, but they remain something of a paradox: researchers are still guessing what we might apply their considerable powers to.

IBM, the editors note, has already simulated the electronic structure of a small molecule using a seven-qubit quantum computer it built, and this has led some to speculate that humans may one day design molecules for a truly better living through chemistry. (Note: Our current computers use bits, which can only be a 0 or a 1. Qubits can be 0 or 1, or both at the same time.) A quantum computer will need 1,000 qubits to enter that realm of processing; the IBM simulation used only seven. There’s more information in the article “Serious quantum computers are finally here. What are we going to do with them?”
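For readers who want to see what “both at the same time” means in numbers, here is a small Python sketch. It assumes the standard textbook picture, which the article itself does not spell out: a qubit is a pair of amplitudes, and a Hadamard gate turns a definite 0 into an equal mix of 0 and 1. The function names are invented for illustration.

```python
import math


def hadamard(state):
    """Apply a Hadamard gate to a single qubit given as (amp_0, amp_1)."""
    a, b = state
    s = 1.0 / math.sqrt(2.0)
    return (s * (a + b), s * (a - b))


def probabilities(state):
    """Chance of reading 0 or 1 when the qubit is measured."""
    a, b = state
    return (abs(a) ** 2, abs(b) ** 2)


def amplitudes_needed(n_qubits):
    """How many numbers an ordinary computer must track to describe n qubits."""
    return 2 ** n_qubits


if __name__ == "__main__":
    zero = (1.0, 0.0)            # a qubit that is definitely 0
    superposed = hadamard(zero)  # now an equal mix of 0 and 1
    print("measurement odds:", probabilities(superposed))
    print("amplitudes for 7 qubits:", amplitudes_needed(7))
```

The count of amplitudes doubles with every added qubit, which is one way to see why the jump from seven qubits to 1,000 matters so much.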

Quite an impressive, and sometimes unsettling, list from the academic tech center on the Charles River. The one consistent note, from year to year, in MIT Technology Review’s list seems to be that significant change is ever accelerating.

You can look over this year’s complete list and every other annual list, going back to the first one in 2001, at www.technologyreview.com/lists/technologies/2018/